Zee Prime Capital: Distributed Storage and Business Models in Web3

Source: Filecoin Network

Note: This article is a reprint. The original was published by Luffistotle on June 7, 2023, on the Zee Prime Capital platform. Zee Prime Capital is a venture capital firm that invests globally in programmable assets and early-stage founders. It positions itself as forward-thinking and resilient (which we believe is true) and focuses on hot areas such as programmable assets and collaborative intelligence. Luffistotle is an investor at Zee Prime Capital. This article represents the author's personal views and is reprinted with the author's permission.

Every computing stack needs storage; without it, nothing else works. As computing resources keep growing, a large amount of storage space sits underutilized. Distributed Storage Networks (DSNs) can coordinate these latent resources and turn them into productive assets. These networks have the potential to bring the first true commercial verticals to the Web 3 ecosystem.

History of P2P Development

The emergence of Napster brought P2P file sharing into the mainstream. Other file-sharing methods existed before it, but Napster's MP3 sharing is what made P2P popular, and distributed systems developed rapidly from then on. Napster relied on a centralized server for indexing, so it was easily shut down for legal reasons. It nevertheless laid the foundation for more robust file-sharing methods.

The Gnutella protocol followed this idea, and many different front ends used the network in their own ways. Gnutella was a more decentralized, Napster-like query network. It was better able to withstand censorship, and this was borne out at the time. AOL had acquired the rising Nullsoft, and once it realized Gnutella's potential it promptly cancelled the release. However, the software had already leaked and was quickly reverse-engineered. This gave rise to well-known front ends such as Bearshare, Limewire, and Frostwire. Ultimately, these applications failed for two reasons: their bandwidth requirements (bandwidth was scarce at the time) and the lack of any guarantee that peers would stay online and content would remain available.

Remember Limewire? If not, it has since been reborn as an NFT marketplace (https://limewire.com/product)...

The next evolution was BitTorrent, an upgrade thanks to two features. The protocol is bidirectional: peers upload while they download. It can also maintain a Distributed Hash Table (DHT). The DHT matters because it acts as a distributed index of where files are located, letting any participating node look them up without a central server.
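To make the DHT idea concrete, here is a minimal single-process sketch loosely modeled on Kademlia, the design behind BitTorrent's Mainline DHT. Node IDs and keys are 160-bit SHA-1 values, and each key is stored on the k nodes closest to it by XOR distance. The class and method names (`ToyDHT`, `announce`, `lookup`) are hypothetical. The file name is hashed as a stand-in for a real infohash. There is no networking, routing table, or churn handling.

```python
import hashlib


def node_id(name: str) -> int:
    """Derive a 160-bit ID from a string with SHA-1 (Kademlia-style ID space)."""
    return int.from_bytes(hashlib.sha1(name.encode()).digest(), "big")


class ToyDHT:
    """Hypothetical single-process stand-in for a DHT.

    Each key is stored on the k nodes whose IDs are closest to it by XOR distance.
    No networking, no routing tables, no node churn.
    """

    def __init__(self, node_names, k=3):
        self.k = k
        # node ID -> that node's local store: {key: set of peer addresses}
        self.nodes = {node_id(n): {} for n in node_names}

    def closest_nodes(self, key: int):
        # Kademlia's distance metric: d(a, b) = a XOR b
        return sorted(self.nodes, key=lambda nid: nid ^ key)[: self.k]

    def announce(self, file_name: str, peer: str) -> None:
        """Record that `peer` holds the file (file name hashed as a stand-in infohash)."""
        key = node_id(file_name)
        for nid in self.closest_nodes(key):
            self.nodes[nid].setdefault(key, set()).add(peer)

    def lookup(self, file_name: str) -> set:
        """Ask the k closest nodes which peers announced this file."""
        key = node_id(file_name)
        peers = set()
        for nid in self.closest_nodes(key):
            peers |= self.nodes[nid].get(key, set())
        return peers


dht = ToyDHT([f"node-{i}" for i in range(20)])
dht.announce("some-file.iso", "10.0.0.5:6881")
print(dht.lookup("some-file.iso"))  # prints {'10.0.0.5:6881'}
```

Because `announce` and `lookup` both route to the same XOR-closest nodes, any participant can find a file's peers without a central index. That central index is exactly the weak point that made Napster easy to shut down.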

After the birth of Bitcoin and blockchain, people began to wonder whether this new coordination mechanism could link latent, unused resources into commodity networks. This line of thinking led to the emergence of Distributed Storage Networks (DSNs).

In fact, many people do not realize that tokens in P2P networks did not start with Bitcoin and blockchain. The original creators of P2P networks had already recognized the following:

Because anyone can fork a protocol, it is very difficult to build a useful protocol and then monetize it. Even monetization through front-end advertising gets undercut by forks.

Usage is highly uneven. In Gnutella, for example, 70% of users shared no files, while 50% of requests were concentrated on files hosted by the top 1% of hosts.
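This kind of skew is typical of a power law. As an illustrative assumption, not fitted to Gnutella data, suppose the host of popularity rank r receives requests proportional to 1/r^s (a Zipf distribution). The hypothetical helper below computes the share of requests the top 1% of hosts would then serve:

```python
def top_share(n_hosts: int, top_fraction: float, s: float = 1.0) -> float:
    """Share of all requests served by the most popular `top_fraction` of hosts.

    Assumes (hypothetically) that the host of rank r receives requests
    proportional to 1 / r**s, i.e. a Zipf distribution with exponent s.
    """
    weights = [1 / r**s for r in range(1, n_hosts + 1)]
    top_n = max(1, round(n_hosts * top_fraction))
    return sum(weights[:top_n]) / sum(weights)


print(round(top_share(10_000, 0.01), 2))  # prints 0.53
```

With s = 1 and 10,000 hosts, the top 1% already serves about 53% of requests, close to the Gnutella figure. This illustrates the shape of the problem; it is not a measurement.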

Power Law

How to solve these problems? BitTorrent started with the share ratio (the ratio of data uploaded to data downloaded), while other protocols introduced primitive token systems. These tokens were often called credits or points. They were distributed to reward good behavior that promotes the healthy development of the protocol, and to maintain the network, for example by curating content through reputation ratings. For the history of this area, I strongly recommend John Backus's articles (now deleted, but still available through the Internet Archive).
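The share-ratio idea can be sketched as a simple policy. Track bytes uploaded and downloaded per peer, and refuse new downloads to peers whose upload/download ratio falls below a threshold. This is a hypothetical, private-tracker-style illustration: the names, thresholds, and the newcomer grace allowance are all assumptions. It is not BitTorrent's own wire protocol, which relies on tit-for-tat choking between peers.

```python
from dataclasses import dataclass


@dataclass
class Peer:
    uploaded: int = 0    # bytes sent to other peers
    downloaded: int = 0  # bytes received from other peers

    @property
    def share_ratio(self) -> float:
        # Share ratio = uploaded / downloaded; a peer that has downloaded
        # nothing gets an infinite ratio rather than a division error.
        return float("inf") if self.downloaded == 0 else self.uploaded / self.downloaded


class RatioTracker:
    """Hypothetical policy: peers below `min_ratio` may not start new downloads."""

    def __init__(self, min_ratio: float = 0.5, grace_bytes: int = 10 * 2**30):
        self.min_ratio = min_ratio
        self.grace_bytes = grace_bytes  # newcomers may download this much before the rule applies
        self.peers: dict[str, Peer] = {}

    def record_transfer(self, sender: str, receiver: str, nbytes: int) -> None:
        self.peers.setdefault(sender, Peer()).uploaded += nbytes
        self.peers.setdefault(receiver, Peer()).downloaded += nbytes

    def may_download(self, peer_id: str) -> bool:
        p = self.peers.get(peer_id, Peer())
        return p.downloaded < self.grace_bytes or p.share_ratio >= self.min_ratio
```

The grace allowance matters in practice: a pure ratio rule would lock out newcomers before they have anything to share.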

It is worth mentioning that Ethereum's initial vision included a DSN as one part of its "Trinity." The aim was to provide the tools the World Computer needed to flourish. Rumor has it that Gavin Wood proposed Swarm as the storage layer and Whisper as the messaging layer, alongside Ethereum itself for computation.

And so mainstream Distributed Storage Networks were born. The rest is history.

Landscape of Distributed Storage Networks

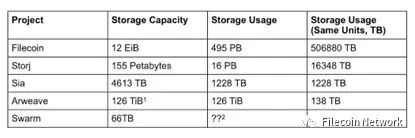

The DSN landscape is interesting because of the wide gap between the leading player (Filecoin) and the emerging networks. The general public tends to see the storage field as dominated by two giants, Filecoin and Arweave. By usage, however, Arweave ranks only fourth, behind Filecoin, Storj, and Sia (although Sia's usage appears to be declining). We may doubt the authenticity of Filecoin's data, but even if we discount it by 90%, Filecoin's usage is still about 400 times that of Arweave.
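Taken at face value, the discount implies a much larger raw gap. Write U_F for Filecoin's reported usage and U_A for Arweave's. The claim then works out as follows; this is simple arithmetic on the figures quoted above, not new data:

```latex
0.1\,U_F \approx 400\,U_A \;\Longrightarrow\; U_F \approx 4000\,U_A
```

In other words, the reported figures themselves put Filecoin at roughly 4,000 times Arweave's usage.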

What can we infer from this?

First, the market currently has dominant players, but whether that dominance can last depends on how useful their storage resources actually are.

These DSNs generally share the same architecture. Node operators hold large amounts of unused storage (hard drives), which they pledge to the network to mine blocks, earning mining rewards for storing data. Pricing and approaches to permanent storage vary. The most important differentiator, however, is letting users retrieve and process stored data easily and cheaply.
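The shared architecture can be illustrated with a toy lottery in which an operator's chance of producing the next block is proportional to the storage it has proven. This is loosely in the spirit of Filecoin's storage-power-based leader election. The functions here are hypothetical, however, and omit the proofs, collateral, and randomness beacon a real network uses:

```python
import random
from collections import Counter


def elect_leader(storage_tib: dict[str, float], rng: random.Random) -> str:
    """Pick one block producer with probability proportional to proven storage (TiB)."""
    operators = list(storage_tib)
    return rng.choices(operators, weights=[storage_tib[o] for o in operators], k=1)[0]


def simulate(storage_tib: dict[str, float], rounds: int = 100_000,
             reward_per_block: float = 1.0, seed: int = 42) -> dict[str, float]:
    """Total rewards per operator after `rounds` independent elections (hypothetical model)."""
    rng = random.Random(seed)
    wins = Counter(elect_leader(storage_tib, rng) for _ in range(rounds))
    return {op: wins[op] * reward_per_block for op in storage_tib}


print(simulate({"big-operator": 900.0, "small-operator": 100.0}))
```

Over many rounds, an operator with 90% of the proven storage collects roughly 90% of the block rewards. That proportionality is what makes idle disks worth pledging.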

Comparison of storage network capacity and usage

Note:

1. Arweave's capacity cannot be measured directly. Its mechanism encourages node operators to keep enough buffer space to meet demand, but because that buffer cannot be measured, we cannot say how large it is.

2. The actual network usage of Swarm is uncertain; we can see the amount of paid storage space, but whether it is used is unknown.

The projects in the table are all operational, and there are also some planned Distributed Storage Networks (DSN), such as ETH Storage and MaidSafe.

FVM

To discuss FVM (Filecoin Virtual Machine), we must first mention the recently launched FEVM (Filecoin Ethereum Virtual Machine). FVM is a hypervisor-like concept: a WASM-based virtual machine that can host many other runtimes. FEVM is one such runtime, the Ethereum Virtual Machine running on FVM on the FIL network.

FEVM is noteworthy because it has set off an explosion of smart-contract activity on FIL. Before FEVM launched in March, there were essentially only 11 active smart contracts on FIL; after the launch, the number surged. The benefits of composability became evident, because work already done in Solidity could be reused to build new businesses on top of FIL. This led to innovations such as the quasi-liquid-staking primitives developed by the GLIF team and the financialization of the storage market.

We believe FVM will accelerate the growth of storage providers by improving capital efficiency, since storage providers need FIL to actively provide storage and seal storage deals. Unlike traditional liquid staking derivatives (LSDs), however, this requires assessing the credit risk of individual storage providers.
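The credit-risk point can be made concrete with the standard expected-loss formula, EL = PD × LGD × EAD, where PD is the probability of default, LGD the loss given default, and EAD the exposure at default. The sketch below applies it to a hypothetical FIL lending pool that lends to storage providers. Every name and number is an illustrative assumption, not GLIF's or any other protocol's actual model:

```python
from dataclasses import dataclass


@dataclass
class StorageProviderLoan:
    name: str
    borrowed_fil: float        # exposure at default (EAD)
    default_prob: float        # probability of default over the period (PD), assumed
    loss_given_default: float  # fraction not recovered, e.g. after seizing collateral (LGD), assumed

    def expected_loss(self) -> float:
        return self.borrowed_fil * self.default_prob * self.loss_given_default


def pool_expected_yield(loans: list[StorageProviderLoan], gross_rate: float) -> float:
    """Expected net yield of a hypothetical pool: gross rate minus expected credit losses.

    Simplification: ignores interest lost on defaulted loans and the timing of recoveries.
    """
    lent = sum(loan.borrowed_fil for loan in loans)
    losses = sum(loan.expected_loss() for loan in loans)
    return gross_rate - losses / lent


loans = [
    StorageProviderLoan("sp-low-risk", 1000.0, default_prob=0.02, loss_given_default=0.5),
    StorageProviderLoan("sp-high-risk", 1000.0, default_prob=0.10, loss_given_default=0.8),
]
print(round(pool_expected_yield(loans, gross_rate=0.10), 4))  # prints 0.055
```

In this example, a 10% gross rate nets about 5.5%, and the riskier provider alone accounts for 4 of the 4.5 percentage points lost. Pooling hides this difference unless each provider is assessed individually.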

Permanent Storage

I believe Arweave has the loudest voice in this regard, as its slogan hits at the deepest desires of Web 3 participants: permanent storage.

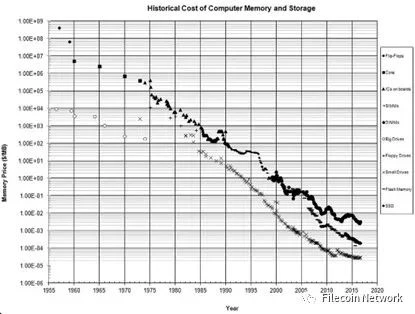

But what does permanent storage really mean? It is undoubtedly appealing, but in reality execution is everything, and the ability to execute hinges on sustainability and cost to end users. Arweave uses a one-time-payment model for permanent storage: payment up front for 200 years of storage, plus the assumption that storage costs will keep falling. This pricing model suits a deflationary pricing environment and relies on continuous goodwill appreciation (i.e., old transactions subsidizing new transactions). In an inflationary environment, it works in reverse. Historically the model has held up, because the cost of computer storage has fallen steadily since its inception. Hard drive costs, however, are not the whole picture.
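The one-time-payment logic can be written as a geometric sum: paying today for `years` of storage whose yearly cost changes at a constant rate. The function below is an illustrative sketch. Its parameter values are assumptions, not Arweave's actual pricing inputs, and it ignores any yield earned on the prepaid funds:

```python
def upfront_price(annual_cost_today: float, years: int = 200,
                  annual_cost_change: float = -0.005) -> float:
    """Upfront payment covering `years` of storage.

    The yearly cost changes by `annual_cost_change` each year
    (negative = storage gets cheaper, positive = inflation).
    Illustrative parameters only; no interest earned on the prepaid amount.
    """
    return sum(annual_cost_today * (1 + annual_cost_change) ** t for t in range(years))


for change in (-0.005, 0.0, 0.02):
    print(change, round(upfront_price(1.0, 200, change), 1))
```

With a 0.5% yearly decline, 200 years cost roughly 127 times today's annual cost. With 2% yearly inflation, the same 200 years cost roughly 2,570 times, which is why the model depends on storage continuing to get cheaper.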

Arweave achieves permanent storage through the incentives in its Succinct Proof of Random Access (SPoRA) algorithm. SPoRA encourages miners to store all the data and to prove they can quickly access randomly selected historical data. Doing so increases a miner's probability of being selected to create the next block (and collect the corresponding reward).
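The core incentive can be illustrated with a heavily simplified simulation. Each mining attempt is tied to a pseudo-randomly chosen "recall" chunk of the stored dataset, and a miner can only use attempts whose chunk it actually holds. The function is hypothetical and omits most of the real algorithm (how the recall index is derived, data packing, difficulty checks). Treat it as a sketch of the incentive, not of Arweave's implementation:

```python
import hashlib


def mining_attempts(stored_chunks: set[int], total_chunks: int, tries: int, seed: int = 0) -> int:
    """Count how many of `tries` attempts a miner can actually use.

    Each attempt hashes a nonce to pick a pseudo-random recall chunk from the whole
    dataset; only a miner that holds that chunk locally can turn the attempt into a
    candidate block. Highly simplified; not Arweave's actual SPoRA.
    """
    usable = 0
    for nonce in range(tries):
        digest = hashlib.sha256(f"{seed}:{nonce}".encode()).digest()
        recall_index = int.from_bytes(digest, "big") % total_chunks
        if recall_index in stored_chunks:
            usable += 1
    return usable


full = mining_attempts(set(range(1000)), 1000, 20_000)
half = mining_attempts(set(range(500)), 1000, 20_000)
print(full, half)
```

A miner holding half the data gets roughly half the usable attempts, so storing more data directly raises its chance of winning a block. Nothing in this mechanism, however, forces it to store everything.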

This mechanism may make node operators want to store all the data, but it does not guarantee that they will. Even with high redundancy and conservative probabilistic modeling to set the parameters, the risk of loss can never be completely eliminated.
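The residual risk is easy to quantify under a simplifying assumption. If each of k replicas is lost independently with probability p, all of them are lost with probability p^k. The helper below is a hypothetical illustration. Real failures are often correlated, which makes the true figure worse:

```python
def loss_probability(replicas: int, p_loss_each: float) -> float:
    """Chance that every copy is lost, assuming (hypothetically) independent failures."""
    return p_loss_each ** replicas


for k in (1, 3, 5, 10):
    print(k, loss_probability(k, 0.05))
```

The probability shrinks quickly with each extra replica but never reaches zero.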

The only way to guarantee permanent storage is to explicitly require someone (or everyone) to carry it out, with failure leading to exclusion. But how do you incentivize people to take on that responsibility? The probabilistic approach itself is not the problem, but the best way to implement and price permanent storage still needs exploration.

Ultimately, we need to ask what level of security people will accept for permanent storage, and then consider pricing over a given time horizon. In practice, consumer preferences will fall somewhere along the replication (permanence) spectrum. Consumers should be able to choose their level of security and pay the corresponding price.
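A replication spectrum with matching prices could look like the following sketch. The buyer picks a target loss probability, the network computes how many independent replicas meet it, and the price scales with that count. The tier names, the per-replica loss rate, and linear per-replica pricing are all hypothetical assumptions:

```python
def replicas_needed(target_loss: float, p_loss_each: float) -> int:
    """Smallest k with p_loss_each**k <= target_loss, assuming independent replicas."""
    if not (0 < p_loss_each < 1 and target_loss > 0):
        raise ValueError("need 0 < p_loss_each < 1 and target_loss > 0")
    k = 1
    while p_loss_each ** k > target_loss:
        k += 1
    return k


def price_menu(p_loss_each: float, price_per_replica: float) -> dict[str, tuple[int, float]]:
    """Hypothetical tiers: tier name -> (replicas, price). Linear pricing is a simplification."""
    tiers = {"standard": 1e-3, "high": 1e-6, "archival": 1e-9}
    menu = {}
    for name, target in tiers.items():
        k = replicas_needed(target, p_loss_each)
        menu[name] = (k, k * price_per_replica)
    return menu


print(price_menu(p_loss_each=0.05, price_per_replica=2.0))
```

Linear pricing is only the simplest choice; a different curve could replace it without changing the structure of the menu.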

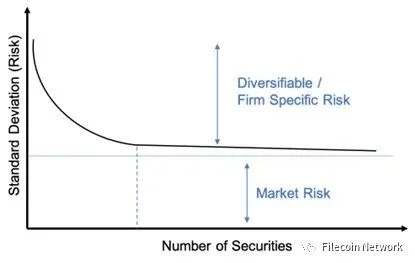

Traditional investment literature and research have thoroughly documented how diversification reduces overall portfolio risk. Adding the first few stocks sharply reduces portfolio risk, but as more are added, the benefit of each additional stock becomes negligible.
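The diminishing benefit follows from the textbook variance of an equally weighted portfolio of n assets: var_p = avg_var / n + (1 - 1/n) * avg_cov. Here avg_var is the average variance of the individual assets and avg_cov their average pairwise covariance. The sketch below evaluates the formula with illustrative inputs:

```python
def portfolio_variance(n: int, avg_var: float, avg_cov: float) -> float:
    """Variance of an equally weighted portfolio of n assets (textbook result)."""
    return avg_var / n + (1 - 1 / n) * avg_cov


# Illustrative inputs: 40% volatility per asset (variance 0.16), average covariance 0.04.
for n in (1, 10, 100, 1000):
    print(n, round(portfolio_variance(n, 0.16, 0.04), 5))
```

Going from 1 to 10 assets cuts variance from 0.16 to 0.052, but going from 100 to 1,000 barely moves it, because the covariance term sets a floor.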

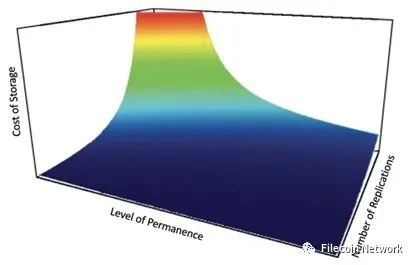

I believe that in DSNs, if the replication count does not correlate with the cost and security of storage, pricing for storage beyond the default replication count should resemble the curve in the image above.

For future developments, I look forward to seeing what opportunities a DSN with easily accessible smart contracts can bring to the permanent storage market. I believe that if the market opens up different options for permanent storage, consumers will benefit.

We can view the green area in the above image as an experimental field, where it may be possible to achieve an exponential decrease in storage costs without significantly altering the amount of replication and permanence.

Permanent storage can also be achieved by replicating data across different storage networks rather than only within a single one. This path is ambitious, but it would naturally create different tiers of permanent storage. The biggest question is whether permanent storage can be spread across DSNs the way a diversified stock portfolio spreads risk, making permanent storage a free lunch.

The possibility certainly exists, but we must account for node providers that operate on several networks at once, among other complications. Some form of insurance could also be considered, such as requiring node operators to accept harsher penalty conditions in exchange for guarantees. Such systems are not easy to maintain either, since they involve coordinating multiple codebases. Nevertheless, we look forward to seeing such designs pushed forward, advancing the concept of permanent storage across the industry.
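The concern about overlapping node providers can be captured with a simple, hypothetical model. Data replicated across several DSNs is lost in either of two ways: a common cause (for example, one operator running nodes on every network) takes out all copies at once, or every network independently loses its copy. The function and probabilities below are illustrative assumptions:

```python
import math


def cross_network_loss(p_loss_per_network: list[float], p_shared_failure: float = 0.0) -> float:
    """Probability of losing data replicated on several DSNs (hypothetical model).

    With probability p_shared_failure a common cause destroys every copy at once;
    otherwise each network loses its copy independently.
    """
    independent = math.prod(p_loss_per_network)
    return p_shared_failure + (1 - p_shared_failure) * independent


print(cross_network_loss([0.01, 0.01, 0.01]))
print(cross_network_loss([0.01, 0.01, 0.01], p_shared_failure=0.001))
```

Three networks with a 1% independent loss rate each give about one-in-a-million odds of loss. A 0.1% chance of a shared failure raises that to about 0.1%, so correlation, not the number of networks, dominates.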

The First Commercial Market in Web3

Matti recently stated in a tweet that storage is a use case bringing tangible commercial value to Web3. I think it is possible.

Recently I spoke with a Layer 1 blockchain team and told them that, as stewards of an L1, they have an obligation to fill block space. More importantly, they should fill it with economic activity. This industry often forgets the second half of its name: the currency in cryptocurrency.

Any protocol that issues a token needs to ensure the token supports some form of economic activity to avoid being shorted. For L1 protocols, the native token pays for execution (computation) through gas fees. The more economic activity there is, the more gas is consumed and the greater the demand for the token. This is the crypto-economic model; other protocols may instead choose to provide SaaS as an intermediary layer.

The crypto-economic model works especially well when it is tied to a specific commodity; for Layer 1 protocols, that commodity is computation. In financial transactions, however, volatile execution prices can seriously hurt user experience. In financial transactions such as swaps, execution cost should be the least important part.

Given the poor user experience, it is very difficult to fill block space with economic activity alone. Scaling solutions keep emerging to help address this (I strongly recommend reading the Interplanetary Consensus White Paper). Even so, the Layer 1 market is saturated, making it hard for any protocol to attract enough economic activity.

When computing power is combined with some additional commodity, the problem becomes simpler. In the case of Distributed Storage Networks, the commodity is clearly storage space. Data storage and its derivatives in finance and securitization can immediately fill the gap in economic activity.

However, distributed storage also needs to offer effective solutions for traditional enterprises, especially those that must comply with data storage regulations. This requires attention to audit standards, geographic restrictions, and user experience.

We discussed Banyan (https://banyan.computer/) in Part 2 of our middleware paper (https://zeeprime.capital/web-3-middleware), whose products are actually on the right track in this regard. They collaborate with DSN node operators to obtain SOC certification for the storage they provide and offer a simple user interface to optimize file uploads.

But that’s not enough.

The content stored must also be easily accessible through an efficient retrieval market. Zee Prime is optimistic about the prospects of building a Content Delivery Network (CDN) on DSN. Essentially, a CDN is a tool that caches content near users and reduces latency when retrieving content.
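The caching behavior described above can be sketched as a least-recently-used (LRU) cache at an edge node. Repeat requests are served locally, and only misses go back to the storage network. `EdgeCache` and the `fetch_from_dsn` callback are hypothetical names; the callback stands in for whatever retrieval call a given DSN actually provides:

```python
from collections import OrderedDict
from typing import Callable


class EdgeCache:
    """Toy LRU cache for a CDN edge node in front of a (slow) DSN retrieval call."""

    def __init__(self, capacity: int, fetch_from_dsn: Callable[[str], bytes]):
        self.capacity = capacity
        self.fetch_from_dsn = fetch_from_dsn  # hypothetical retrieval function, keyed by content ID
        self.items = OrderedDict()            # content ID -> bytes, oldest first
        self.hits = 0
        self.misses = 0

    def get(self, cid: str) -> bytes:
        if cid in self.items:
            self.items.move_to_end(cid)  # mark as most recently used
            self.hits += 1
            return self.items[cid]
        self.misses += 1
        data = self.fetch_from_dsn(cid)  # slow path: go back to the storage network
        self.items[cid] = data
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used item
        return data
```

Popular content stays at the edge and is served without a round trip to the DSN, which is where the latency savings come from. Cache size and eviction policy are the main design knobs.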

We believe this is the next key to widespread DSN adoption, because it can enable fast video loading (think a distributed Netflix, YouTube, or TikTok). Glitter, one of our portfolio companies, works in this field with a focus on DSN indexing. Indexing is critical infrastructure that can make the retrieval market more efficient and enable richer use cases.

Such products have already shown a high degree of product-market fit, with significant demand in Web 2. Nevertheless, many products still face some friction, and the permissionless nature of Web 3 may become their salvation.

The Significance of Composability

In fact, we believe excellent opportunities in the DSN field are right in front of us. In two articles, Jnthnvctr.eth discusses how the market is developing and some upcoming products (using Filecoin as an example).

One of the most interesting points is the potential for combining off-chain computation with storage and on-chain computation. This is because providing storage resources inherently requires computational power. This natural combination can increase commercial activity within DSN while opening up new use cases.

The launch of FEVM has made many new attempts possible and has brought fun and competition to the storage field. Entrepreneurs looking to create new products can check the resource library to see all the products that Protocol Labs hopes people will build, with the possibility of earning rewards.

Web 2 has revealed the gravity of data; companies that collect/create large amounts of data can reap rewards, and they will close off data to protect their own interests.

If our ideal user-controlled data solutions become mainstream, the scenarios for value accumulation will change. Users become the primary beneficiaries, exchanging data for cash flow, and monetization tools that unlock this potential can benefit, leading to significant changes in how data is stored and accessed. This type of data can naturally be stored on DSN, which can profit from data through a robust query market. This is a shift from exploitation to liquidity.

There may be even more magical developments awaiting us.

When envisioning the future of distributed storage, consider how it interacts with future operating systems (like Urbit). Urbit is a personal server built using open-source software that allows users to participate in P2P networks. It is a truly distributed operating system that can self-host and interact with the internet in a P2P manner.

If the future unfolds as Urbit's followers hope, distributed storage will undoubtedly become a key component of personal tech stacks. Users could encrypt and store all their personal data on a DSN and coordinate actions through the Urbit operating system. We can also expect deeper integration of distributed storage with Web 3 and Urbit, especially through projects like Uqbar Network, which can bring smart contracts into the Nock environment.

This is the power of composability, where slow accumulation ultimately leads to delightful results. From small beginnings to a revolution, it points to a way of existing in an ultra-connected world. While Urbit may not be the final answer to the problem (it has faced criticism), it shows us how these attempts can converge into a river leading to the future.