L2BEAT "cracked" LayerZero with an experiment: Why is "independent security" not secure?

Original Title: "Circumventing Layer Zero: Why Isolated Security is No Security"

Author: Krzysztof Urbański, L2BEAT Team Member

Compiled by: Babywhale, Foresight News

Since its inception, L2BEAT has invested significant effort in analyzing and understanding the risks of Layer 2 protocols. We always act in the best interests of users and the ecosystem, striving to be a fair and independent watchdog, and we do not let our personal preferences for particular projects or teams influence the facts. This is why, even though we respect the time and effort that project teams invest in their work, we will still "sound the alarm" about potential risks that certain protocols may pose, or voice our concerns. Discussing security early prepares the whole ecosystem for potential risks and allows a faster response to any suspicious behavior.

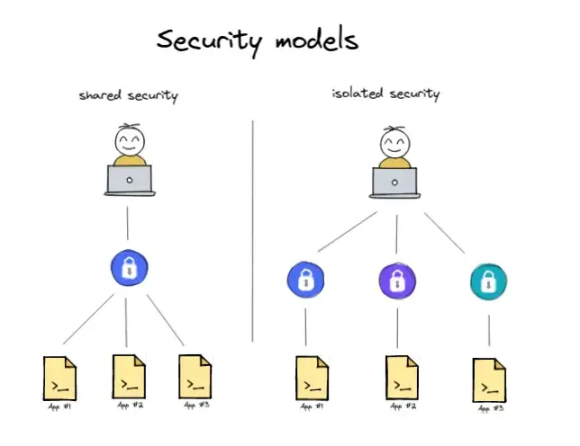

Today, we want to discuss the security models of cross-chain applications. There are currently two: shared security and independent (application-level) security. Shared security applies to all Rollups, while independent security is used mainly by "omnichain" projects, most of which are built on LayerZero.

Shared Security vs. Independent Security

Shared security means that tokens or applications running on a given infrastructure must comply with the security requirements that infrastructure imposes, rather than freely choosing their own security model. For example, Optimistic Rollups typically impose a 7-day finality window, and applications running on such Rollups cannot simply ignore or shorten it. This may look like an obstacle, but it exists for a reason: the window gives users security guarantees that hold regardless of an application's internal security policies. An application can only add security on top of the Rollup's guarantees; it cannot weaken them.
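To make the contrast concrete, here is a minimal Python sketch of shared security, assuming a toy model in which the Rollup fixes a 7-day withdrawal window. The names `ROLLUP_CHALLENGE_PERIOD_DAYS` and `RollupApp` are hypothetical and do not correspond to any real Rollup contract; the point is only that an application's own setting is added on top of the infrastructure's floor and can never reduce it.

```python
from dataclasses import dataclass

# Toy model: the infrastructure's floor, which no application can lower.
ROLLUP_CHALLENGE_PERIOD_DAYS = 7


@dataclass
class RollupApp:
    name: str
    extra_delay_days: int = 0  # an app may ask for more delay, never less

    def withdrawal_delay_days(self) -> int:
        # Negative requests are clamped to zero, so the 7-day window always applies.
        return ROLLUP_CHALLENGE_PERIOD_DAYS + max(0, self.extra_delay_days)


print(RollupApp("cautious", extra_delay_days=2).withdrawal_delay_days())  # 9
print(RollupApp("impatient", extra_delay_days=-7).withdrawal_delay_days())  # 7
```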

Independent security means that each application defines its own security, with no restrictions imposed by the infrastructure. At first glance this seems like a good idea, since application developers know best what security measures their applications need. However, it also shifts the burden of assessing each application's security policy onto end users. Moreover, if developers can freely choose their security policies, they can also change them at any time. Assessing each application's risk once is therefore not enough; it must be reassessed every time the application's policy changes.
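A minimal sketch of why a one-time review is not enough under independent security, assuming an application's security settings can be represented as a plain dictionary. The review records a SHA-256 fingerprint of the configuration it examined, and any later change invalidates it. `config_fingerprint`, `Assessment`, and the address used are hypothetical illustrations, not part of any real tool.

```python
import hashlib
import json


def config_fingerprint(config: dict) -> str:
    # Canonical JSON (sorted keys) so that key order does not affect the hash.
    blob = json.dumps(config, sort_keys=True).encode()
    return hashlib.sha256(blob).hexdigest()


class Assessment:
    """A risk review that is only meaningful for the configuration it examined."""

    def __init__(self, reviewed_config: dict, verdict: str):
        self.verdict = verdict
        self.reviewed_fingerprint = config_fingerprint(reviewed_config)

    def still_valid(self, current_config: dict) -> bool:
        # Any change at all voids the earlier verdict.
        return config_fingerprint(current_config) == self.reviewed_fingerprint


config = {"oracle": "default-oracle", "relayer": "default-relayer"}
review = Assessment(config, verdict="acceptable")
print(review.still_valid(config))  # True

config["oracle"] = "0xB0b"  # hypothetical address; the owner changes a setting later
print(review.still_valid(config))  # False
```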

Existing Issues

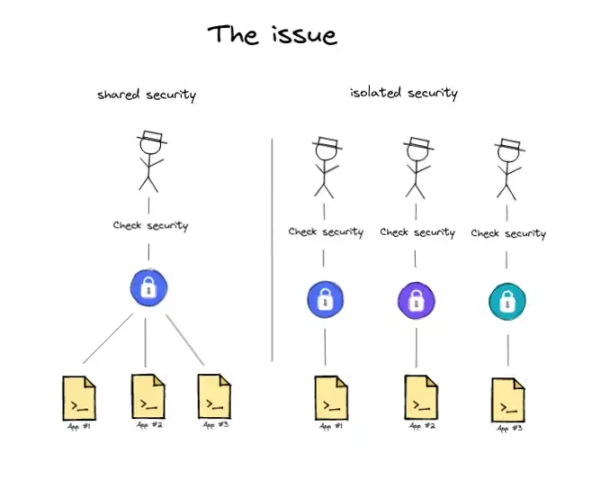

We believe that allowing each application to freely define its security policy in an independent security model poses serious security issues. First, it increases the risk for end users, as they must verify the risks of each application they intend to use.

Independent security also increases the risk to the applications themselves, chiefly because their security policies can be changed. An attacker who gains control of an application's configuration does not need to break its security model; it is easier to simply switch it off and then drain funds or attack in any other way. There is no additional security layer above the application to stop this.

Furthermore, because security policies can be changed at any time and take effect instantly, it is nearly impossible to monitor applications in real time and warn users of the risks.

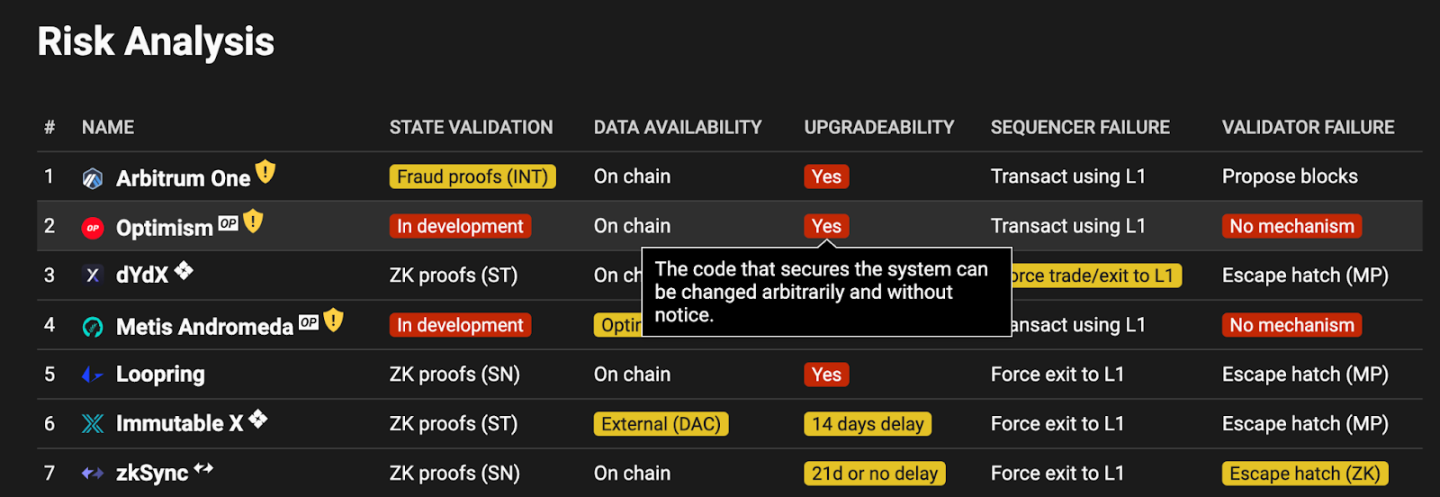

We see this as similar to smart contract upgradability, which we already flag on L2BEAT: we tell users which Rollups and bridges have upgradeable smart contracts, and exactly how upgrades are controlled in each case. This is already quite complex, and the independent security model multiplies the number of variables further, making effective tracking nearly impossible.

This is why we believe the independent security model is itself a security risk, and why we assume by default that every application using it is risky unless proven otherwise.

Proving Security Vulnerabilities Exist

We decided to test our hypothesis on mainnet. We chose LayerZero for the experiment because it is one of the most popular frameworks built around independent security. We deployed a secure omnichain token and then updated its security configuration to allow tokens to be maliciously extracted. The token's code is based on examples provided by LayerZero and is very similar or identical to many omnichain tokens and applications actually deployed today.

But before we delve into the details, let's briefly look at how LayerZero's security model works.

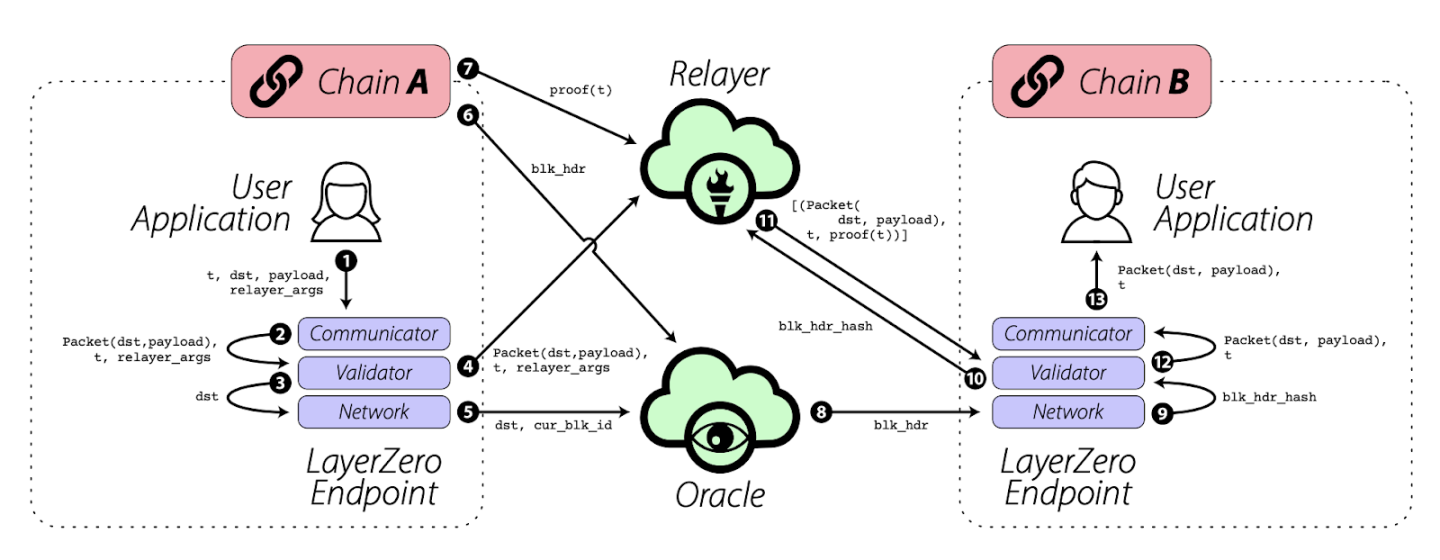

As LayerZero points out in its white paper, its "trustless inter-chain communication" relies on two independent participants (oracles and relayers) acting together to ensure the security of the protocol.

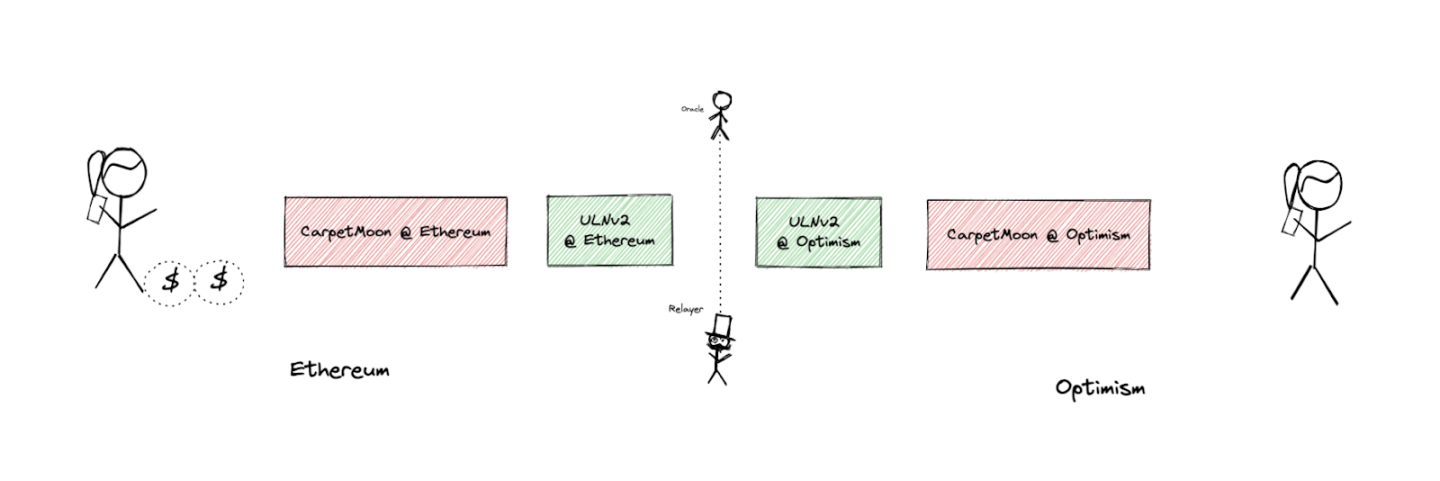

LayerZero states on its website that its core concept is "running ULN (UltraLightNode), a configurable on-chain endpoint for user applications." The on-chain components of LayerZero rely on two external off-chain components to relay messages between chains: an oracle and a relayer.

Whenever any message M is sent from chain A to chain B, the following two operations occur:

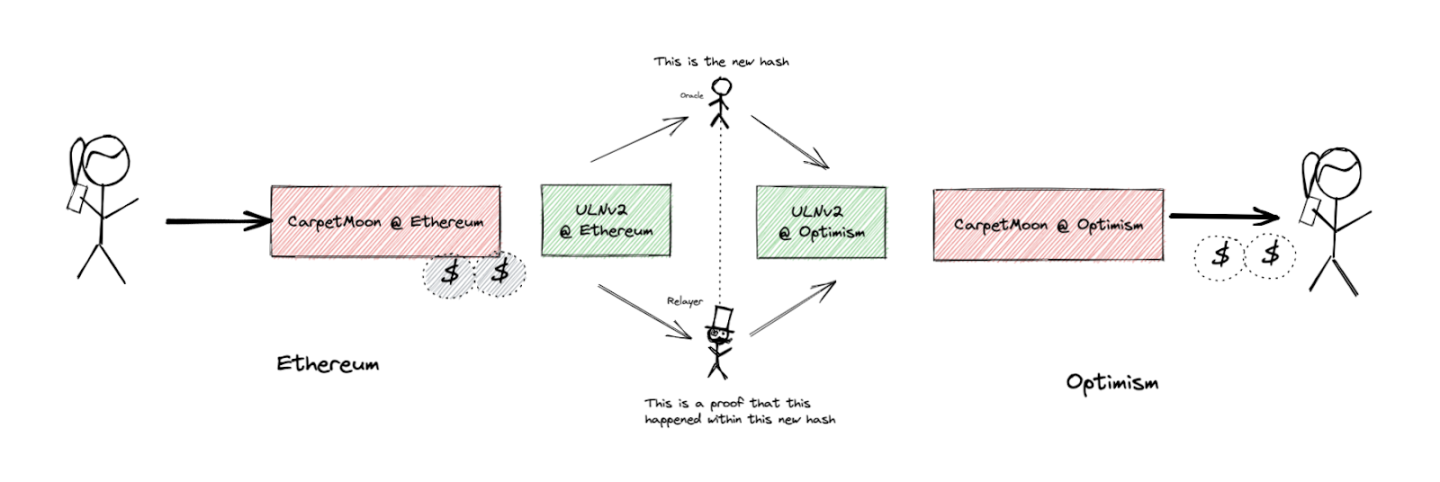

- First, the oracle waits for the transaction that sends message M on chain A to be confirmed, then writes relevant information to chain B, such as the hash of the chain A block header that contains M (the exact format may vary between chains and oracles).

- Then the relayer submits a "proof" (e.g., a Merkle proof) to chain B, showing that the stored block header contains message M.

LayerZero assumes that the relayer and oracle are independent, honest parties. Its white paper also acknowledges what happens if this assumption does not hold: if, for example, the relayer and oracle collude, the result can be "the block header provided by the oracle and the transaction proof provided by the relayer being invalid but still matching," and a forged message would then be accepted.
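The two-step flow and the collusion problem can be illustrated with a toy model in Python. This is a simplified sketch, not LayerZero's implementation: it assumes the oracle writes a Merkle root of a block's messages (standing in for the block header hash) and the relayer supplies a standard SHA-256 Merkle inclusion proof. `ToyULN`, `oracle_update`, `relayer_deliver`, and the message strings are all hypothetical. It shows that an honest oracle stops a dishonest relayer, while an oracle and relayer controlled by the same party can publish a fake header and a proof that matches it.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_levels(leaves: list[bytes]) -> list[list[bytes]]:
    # Hash leaves, then hash pairs upward; an unpaired last node is paired with itself.
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 else level
        level = [h(padded[i] + padded[i + 1]) for i in range(0, len(padded), 2)]
        levels.append(level)
    return levels


def merkle_root(leaves: list[bytes]) -> bytes:
    return merkle_levels(leaves)[-1][0]


def merkle_proof(leaves: list[bytes], index: int) -> list[bytes]:
    proof = []
    for level in merkle_levels(leaves)[:-1]:
        sibling = index ^ 1
        proof.append(level[sibling] if sibling < len(level) else level[index])
        index //= 2
    return proof


def verify(root: bytes, leaf: bytes, index: int, proof: list[bytes]) -> bool:
    node = h(leaf)
    for sibling in proof:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root


class ToyULN:
    """Destination-chain endpoint in this toy model (not LayerZero's contract)."""

    def __init__(self):
        self.headers = {}  # block number on chain A -> root written by the oracle

    def oracle_update(self, block: int, root: bytes) -> None:
        self.headers[block] = root

    def relayer_deliver(self, block: int, msg: bytes, index: int, proof: list[bytes]) -> bool:
        root = self.headers.get(block)
        return root is not None and verify(root, msg, index, proof)


real_block = [b"burn 500M CarpetMoon, pay Alice", b"unrelated message"]
forged = b"burn 500M CarpetMoon, pay Bob"
uln = ToyULN()

# 1. Honest oracle and relayer: the genuine message is accepted.
uln.oracle_update(100, merkle_root(real_block))
print(uln.relayer_deliver(100, real_block[0], 0, merkle_proof(real_block, 0)))  # True

# 2. Dishonest relayer alone: a forged message does not match the honest header.
print(uln.relayer_deliver(100, forged, 0, merkle_proof(real_block, 0)))  # False

# 3. Colluding oracle and relayer: a fake block, its root, and a matching proof.
fake_block = [forged]
uln.oracle_update(101, merkle_root(fake_block))
print(uln.relayer_deliver(101, forged, 0, merkle_proof(fake_block, 0)))  # True
```

Case 3 is the "invalid but still matching" situation: the proof is mathematically valid, but the header it is checked against was never produced by chain A.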

LayerZero claims that "the design of LayerZero eliminates the possibility of collusion." As our experiment below demonstrates, this claim is incorrect, because each user application can define its own relayer and oracle. LayerZero's design does not guarantee that these components are independent and cannot collude; it is the user application that provides (or fails to provide) these guarantees. If an application chooses to undermine them, LayerZero has no mechanism to stop it.

Moreover, by default every user application can change its relayer and oracle at any time, completely redefining its security assumptions. Checking an application's security once is therefore not enough, because the configuration can change at any moment after the check, as our experiment shows.
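The per-application configuration can be sketched the same way. In this hypothetical Python model (`ToyEndpoint`, `set_config`, and `effective_config` are illustrative names, not LayerZero's interface), each application either inherits a default oracle and relayer or overrides them. For brevity, the owner check is folded into the toy endpoint. Note that the only check is who is calling: nothing tests whether the two roles are independent, and the change takes effect immediately.

```python
from dataclasses import dataclass, field

DEFAULT = {"oracle": "default-oracle", "relayer": "default-relayer"}


@dataclass
class ToyEndpoint:
    """Toy endpoint holding optional per-application oracle/relayer overrides."""

    owners: dict = field(default_factory=dict)     # app -> owner key
    overrides: dict = field(default_factory=dict)  # app -> {"oracle": ..., "relayer": ...}

    def register(self, app: str, owner: str) -> None:
        self.owners[app] = owner

    def set_config(self, app: str, caller: str, oracle: str, relayer: str) -> None:
        # The only check is who is calling. Nothing verifies that the oracle and
        # relayer are independent, and the change takes effect immediately.
        if self.owners.get(app) != caller:
            raise PermissionError("caller is not the application owner")
        self.overrides[app] = {"oracle": oracle, "relayer": relayer}

    def effective_config(self, app: str) -> dict:
        # Applications without an override inherit the defaults.
        return dict(self.overrides.get(app, DEFAULT))


ep = ToyEndpoint()
ep.register("CarpetMoon", owner="team-key")
print(ep.effective_config("CarpetMoon"))
# {'oracle': 'default-oracle', 'relayer': 'default-relayer'}

# Whoever holds the owner key can point both roles at one address.
ep.set_config("CarpetMoon", "team-key", oracle="0xB0b", relayer="0xB0b")
print(ep.effective_config("CarpetMoon"))
# {'oracle': '0xB0b', 'relayer': '0xB0b'}
```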

Experimental Design

In our experiment, we created a simple omnichain token called CarpetMoon that runs on both Ethereum and Optimism and uses LayerZero to communicate between the two chains.

Our token initially used LayerZero's default security configuration, making it identical to many (though not all) currently deployed LayerZero applications. It was therefore about as secure as any other token built on LayerZero.

First, we deployed our token contract on Ethereum and Optimism:

https://ethtx.info/mainnet/0xf4d1cdabb6927c363bb30e7e65febad8b9c0f6f76f1984cd74c7f364e3ab7ca9/

Then we set up routing so that LayerZero knows which contract on one chain corresponds to which contract on the other:

https://ethtx.info/mainnet/0x19d78abb03179969d6404a7bd503148b4ac14d711f503752495339c96a7776e9/
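Routing can be pictured as a per-chain table of trusted counterpart contracts. The sketch below is a hypothetical Python model (`ToyTokenContract`, `set_trusted_remote`, and the placeholder addresses are illustrative, not taken from LayerZero's code): the receiving contract accepts a message only if its claimed source matches the configured counterpart on that chain.

```python
class ToyTokenContract:
    """Toy token contract with a per-chain table of trusted counterpart contracts."""

    def __init__(self, owner: str):
        self.owner = owner
        self.trusted_remote: dict[str, str] = {}  # remote chain -> counterpart address

    def set_trusted_remote(self, caller: str, chain: str, address: str) -> None:
        if caller != self.owner:
            raise PermissionError("only the owner can set routes")
        self.trusted_remote[chain] = address

    def accepts(self, src_chain: str, src_address: str) -> bool:
        # A message is accepted only if it claims to come from the configured counterpart.
        return self.trusted_remote.get(src_chain) == src_address


eth_token = ToyTokenContract(owner="team-key")
eth_token.set_trusted_remote("team-key", "optimism", "0xOptimismCarpetMoon")
print(eth_token.accepts("optimism", "0xOptimismCarpetMoon"))  # True
print(eth_token.accepts("optimism", "0xSomeOtherContract"))   # False
```

This check only constrains which source a message claims; whether the message really came from that contract is exactly what the oracle and relayer attest to. A colluding pair can therefore forge messages that appear to come from the trusted counterpart.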

The token is now deployed, and it looks exactly like every other omnichain token using LayerZero: default configuration, nothing suspicious.

We provided our "test user" Alice with 1 billion CarpetMoon tokens on Ethereum.

https://ethtx.info/mainnet/0x7e2faa8426dacae92830efbf356ca2da760833eca28e652ff9261fc03042b313/

Now Alice uses LayerZero to bridge these tokens to Optimism.

First, the tokens are locked in the custody contract on Ethereum:

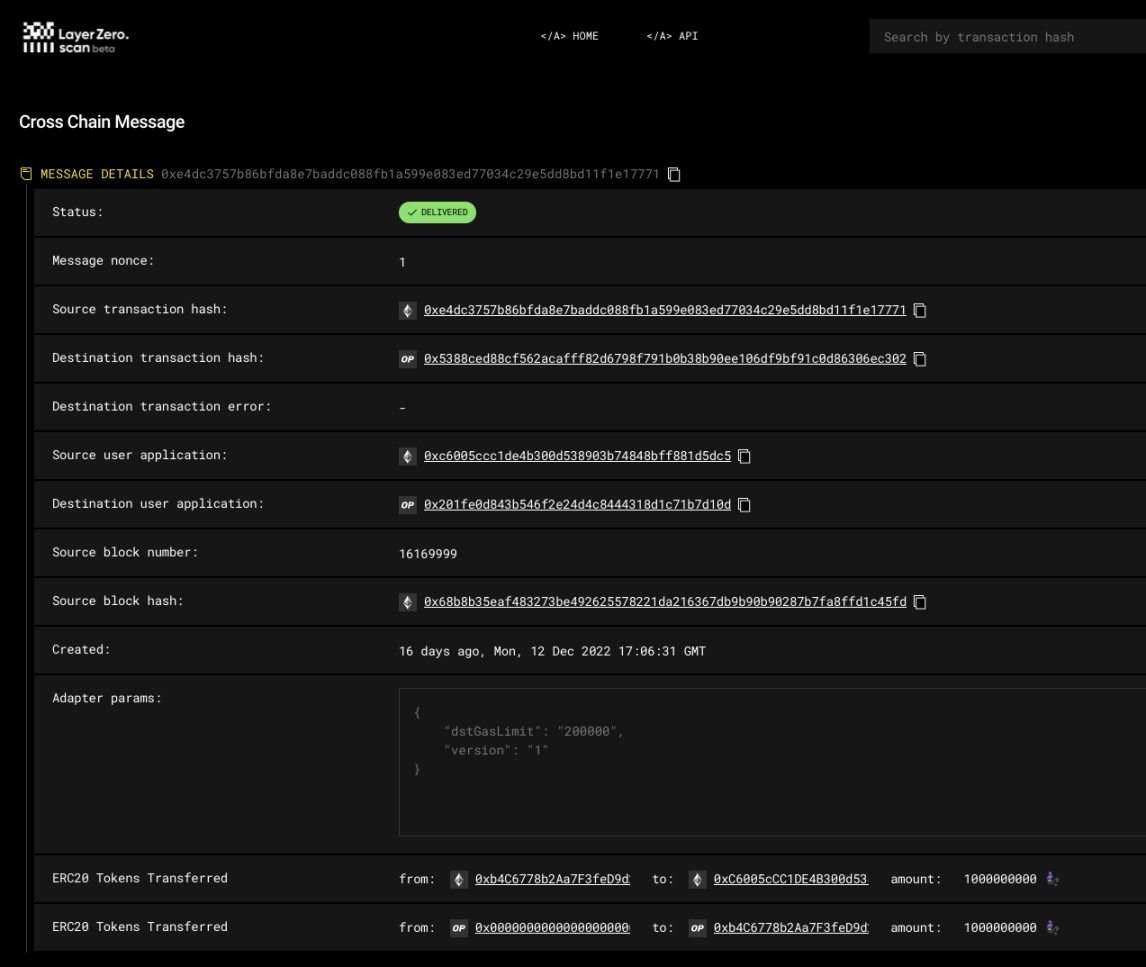

https://ethtx.info/mainnet/0xe4dc3757b86bfda8e7baddc088fb1a599e083ed77034c29e5dd8bd11f1e17771/.

A message about this transaction is relayed to Optimism via LayerZero:

The bridged tokens are minted on Optimism, and Alice now has 1 billion CarpetMoon tokens there:

Everything works as expected: Alice has bridged the tokens, she can see 1 billion CarpetMoon tokens in the custody contract on Ethereum, and she holds 1 billion CarpetMoon tokens in her account on Optimism. To make sure everything is working correctly, she also sends half of the tokens back to Ethereum.

We start with the transaction to burn 500 million tokens on Optimism:

Information about this transaction is relayed to Ethereum:

As expected, 500 million CarpetMoon tokens are released from the custody contract to Alice's address:

https://etherscan.io/tx/0x27702e07a65a9c6a7d1917222799ddb13bb3d05159d33bbeff2ca1ed414f6a18.

So far, everything is normal and fully consistent with our assumptions. Alice has confirmed that she can bridge tokens from Ethereum to Optimism and back, and she has no reason to worry about her CarpetMoon tokens.
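Alice's round trip follows the usual lock-and-mint pattern, which can be summarized in a short Python sketch. It is a hypothetical accounting model (`ToyBridge` and its methods are illustrative names) that collapses message delivery into direct function calls; its purpose is to state the invariant that makes the bridged tokens safe: every CarpetMoon token on Optimism is backed by one locked in custody on Ethereum.

```python
class ToyBridge:
    """Toy lock-and-mint accounting; message delivery is collapsed into direct calls."""

    def __init__(self):
        self.eth = {}     # balances on Ethereum
        self.op = {}      # balances on Optimism
        self.custody = 0  # tokens locked in the Ethereum custody contract

    def lock_and_mint(self, user: str, amount: int) -> None:
        if self.eth.get(user, 0) < amount:
            raise ValueError("insufficient balance on Ethereum")
        self.eth[user] -= amount                       # Ethereum: lock in custody
        self.custody += amount
        self.op[user] = self.op.get(user, 0) + amount  # Optimism: mint

    def burn_and_unlock(self, user: str, amount: int) -> None:
        if self.op.get(user, 0) < amount:
            raise ValueError("insufficient balance on Optimism")
        self.op[user] -= amount                        # Optimism: burn
        self.custody -= amount                         # Ethereum: release
        self.eth[user] = self.eth.get(user, 0) + amount

    def fully_backed(self) -> bool:
        # The invariant: tokens on Optimism == tokens locked on Ethereum.
        return self.custody == sum(self.op.values())


bridge = ToyBridge()
bridge.eth["alice"] = 1_000_000_000
bridge.lock_and_mint("alice", 1_000_000_000)
bridge.burn_and_unlock("alice", 500_000_000)
print(bridge.eth["alice"], bridge.op["alice"], bridge.custody, bridge.fully_backed())
# 500000000 500000000 500000000 True
```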

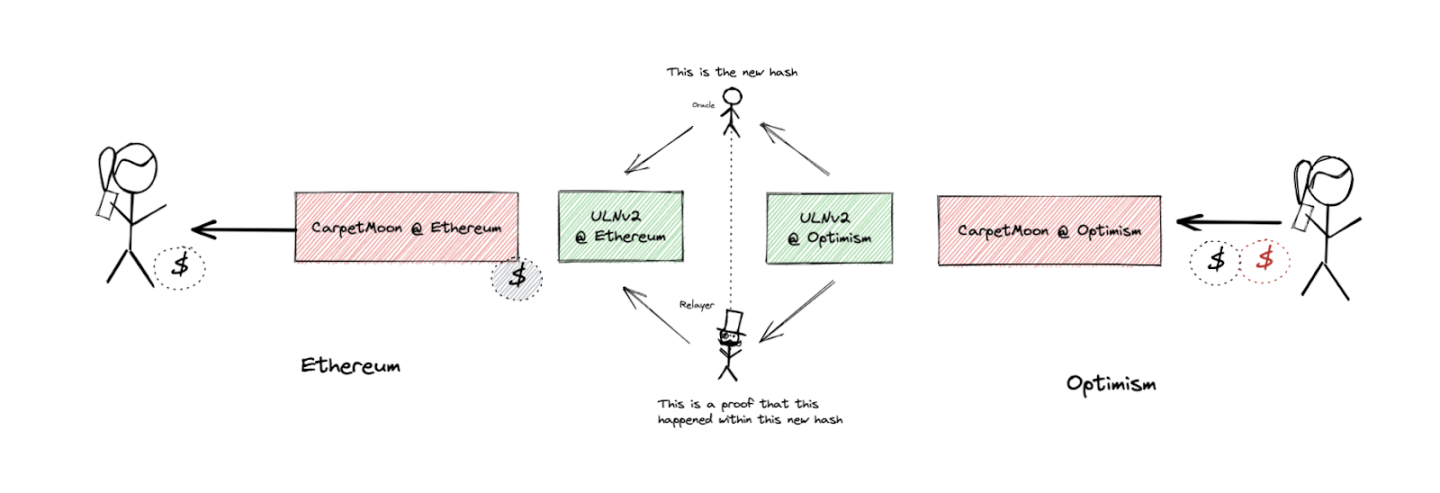

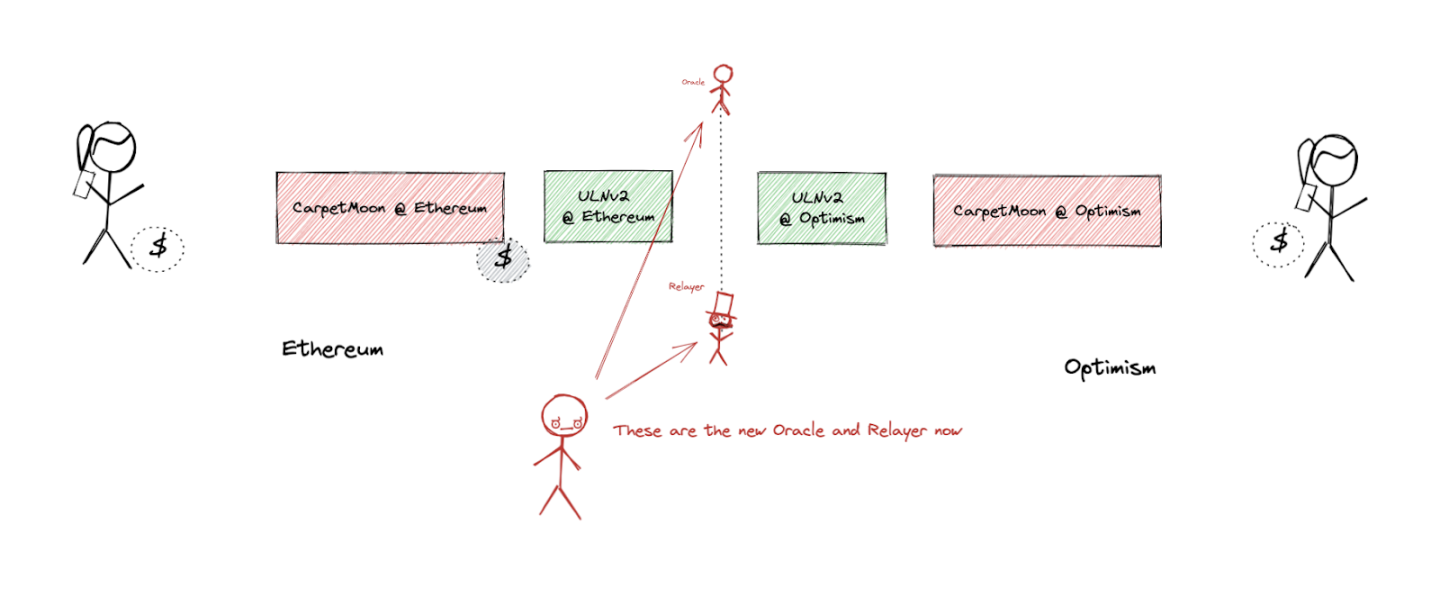

But there is a problem with that assumption: what if something goes wrong with the team behind our token, and a bad actor, Bob, gains access to our application's LayerZero configuration?

Bob could then change the oracle and relayer from the default components to ones under his control.

It is important to note that this mechanism is available to every application using LayerZero and is rooted in LayerZero's architecture; it is not a backdoor of any kind but a standard feature.

So Bob changes the oracle to an EOA under his control:

https://ethtx.info/mainnet/0x4dc84726da6ca7d750eef3d33710b5f63bf73cbe03746f88dd8375c3f4672f2f/.

The relayer is also changed similarly:

https://ethtx.info/mainnet/0xc1d7ba5032af2817e95ee943018393622bf54eb87e6ff414136f5f7c48c6d19a/.

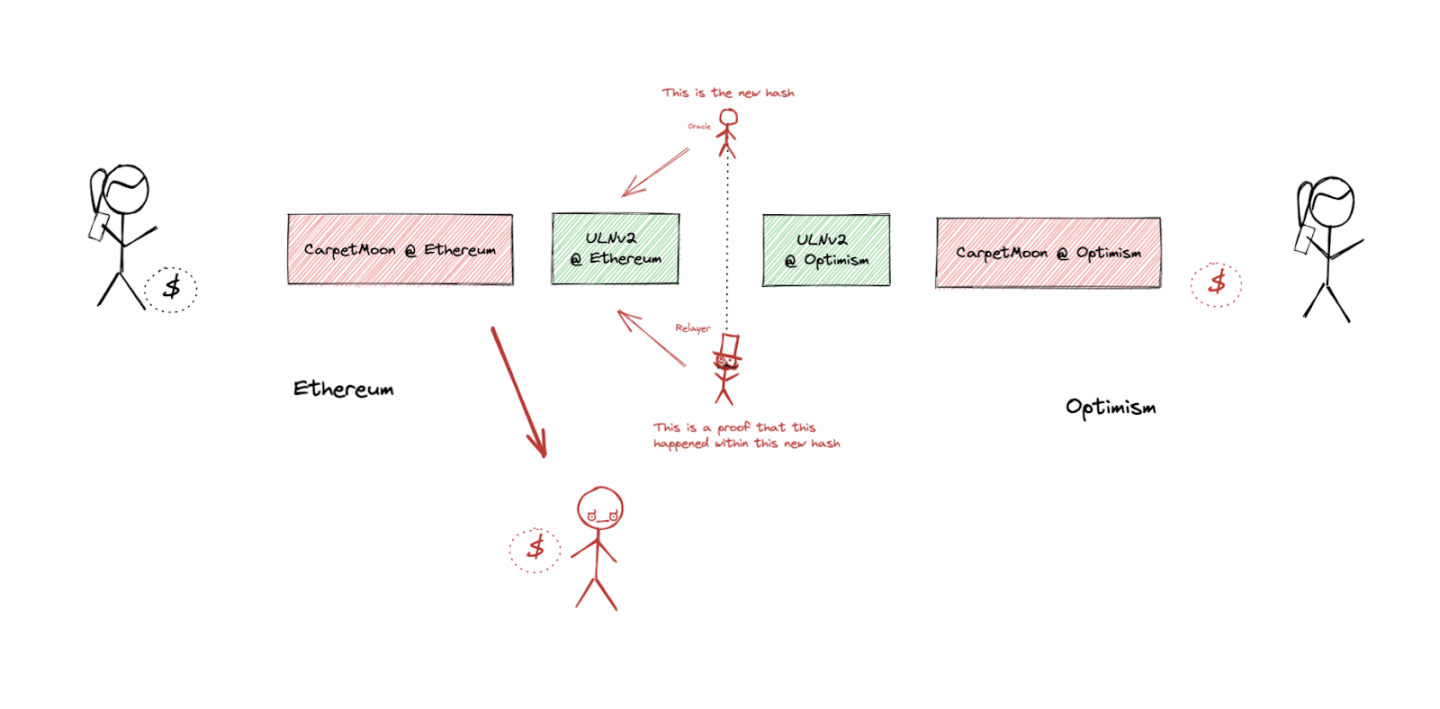

Now strange things happen. Because the oracle and relayer are entirely under Bob's control, he can steal Alice's tokens. Without taking any action on Optimism (the CarpetMoon tokens are still in Alice's wallet there), Bob can convince the CarpetMoon smart contract on Ethereum, through LayerZero's own mechanisms, that tokens have been burned on the other chain, and then withdraw the corresponding CarpetMoon tokens on Ethereum.

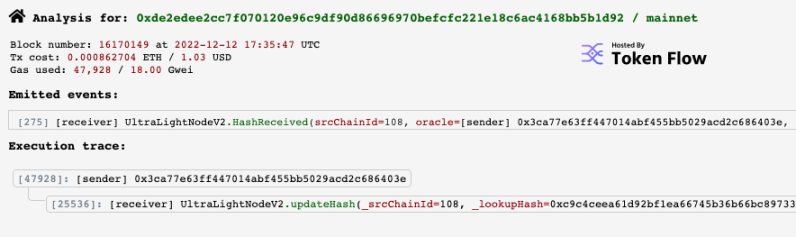

First, he updates the block hash on Ethereum using the oracle he controls:

https://ethtx.info/0xde2edee2cc7f070120e96c9df90d86696970befcfc221e18c6ac4168bb5b1d92/.

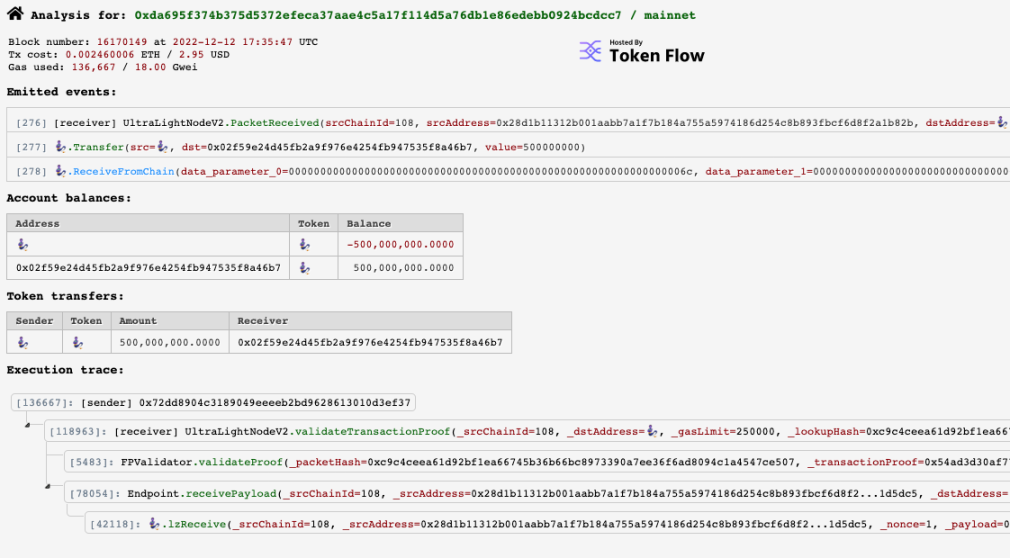

Now he can withdraw the remaining tokens from the custody contract:

https://ethtx.info/0xda695f374b375d5372efeca37aae4c5a17f114d5a76db1e86edebb0924bcdcc7/.
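The effect of the attack on the token's backing can be sketched as follows. In this hypothetical Python model, `CustodyContract` releases locked tokens whenever its `verify` callback, which stands in for the application's configured oracle and relayer, accepts a burn message. The honest pair only attests to burns that actually happened; Bob's pair attests to anything. The amounts mirror the experiment, but the code is an illustration, not the deployed contract.

```python
from typing import Callable


class CustodyContract:
    """Toy Ethereum-side custody that releases tokens on an attested burn message."""

    def __init__(self, locked: int, verify: Callable[[dict], bool]):
        self.locked = locked
        self.verify = verify  # stands in for the app's configured oracle + relayer
        self.balances: dict[str, int] = {}

    def receive_burn(self, message: dict) -> bool:
        if not self.verify(message) or message["amount"] > self.locked:
            return False
        self.locked -= message["amount"]
        to = message["to"]
        self.balances[to] = self.balances.get(to, 0) + message["amount"]
        return True


real_burns: list[dict] = []  # nothing was burned on Optimism during the attack


def honest_pair(message: dict) -> bool:
    return message in real_burns  # only attests to burns that actually happened


def bobs_pair(message: dict) -> bool:
    return True  # Bob runs both roles, so he attests to anything


optimism_supply = 500_000_000  # Alice's untouched tokens on Optimism
custody = CustodyContract(locked=500_000_000, verify=honest_pair)
fake_burn = {"to": "bob", "amount": 500_000_000}

print(custody.receive_burn(fake_burn))  # False: the default pair rejects it
custody.verify = bobs_pair              # the oracle/relayer change
print(custody.receive_burn(fake_burn))  # True
print(custody.locked, custody.balances["bob"], custody.locked == optimism_supply)
# 0 500000000 False
```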

Experimental Results

Alice has no idea why or when things went wrong. Suddenly, her CarpetMoon tokens on Optimism are no longer backed by tokens on Ethereum.

The smart contract is not upgradeable and behaves as designed. The only suspicious activity is the change of oracle and relayer, but that is a routine mechanism built into LayerZero, so Alice cannot tell whether the change was intentional. Even if she learned of it, it would be too late: the attacker could drain the funds before she could react.

LayerZero is also powerless here: these are valid uses of its mechanisms, over which it has no control. In theory, an application could prevent itself from ever changing its oracle and relayer, but to our knowledge no deployed application has done so.
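One way an application could rule out the configuration change used in this experiment is a one-way freeze. The sketch below is a hypothetical Python model (`ToyConfigurableApp` and `freeze` are illustrative names, not an existing LayerZero feature): once the owner freezes the configuration, even someone holding the owner key cannot swap the oracle or relayer.

```python
class ToyConfigurableApp:
    """Toy application whose owner can permanently freeze its oracle/relayer choice."""

    def __init__(self, owner: str, oracle: str, relayer: str):
        self.owner = owner
        self.oracle, self.relayer = oracle, relayer
        self.frozen = False

    def _only_owner(self, caller: str) -> None:
        if caller != self.owner:
            raise PermissionError("caller is not the owner")

    def set_config(self, caller: str, oracle: str, relayer: str) -> None:
        self._only_owner(caller)
        if self.frozen:
            raise PermissionError("configuration is frozen")
        self.oracle, self.relayer = oracle, relayer

    def freeze(self, caller: str) -> None:
        # One-way switch: there is deliberately no way to unfreeze.
        self._only_owner(caller)
        self.frozen = True


app = ToyConfigurableApp("team-key", "default-oracle", "default-relayer")
app.freeze("team-key")
try:
    # Even with a stolen owner key, Bob cannot swap in his own oracle and relayer.
    app.set_config("team-key", oracle="0xB0b", relayer="0xB0b")
except PermissionError as err:
    print(err)  # configuration is frozen
print(app.oracle, app.relayer)  # default-oracle default-relayer
```

Freezing is a blunt tool: it also removes the team's ability to move away from an oracle or relayer that later turns out to be compromised, so it is a trade-off rather than a free fix.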

We ran this experiment to see whether anyone would notice, and, as we expected, no one did. Effectively monitoring every application built on LayerZero for changes to its security configuration, and warning users when they occur, is nearly impossible.
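For illustration, here is roughly what a naive monitor would have to do, assuming it can periodically read every application's oracle and relayer into a snapshot. `flag_changes` and the snapshot format are hypothetical. Even this only works after the fact: it compares two snapshots, so by the time an alert fires the new configuration is already live. Its same-address check is also weak, since two different addresses can easily be controlled by one party.

```python
DEFAULT_ORACLE = "default-oracle"
DEFAULT_RELAYER = "default-relayer"


def flag_changes(previous: dict, current: dict) -> list[str]:
    """Compare snapshots of {app: (oracle, relayer)} and describe what changed."""
    alerts = []
    for app, (oracle, relayer) in sorted(current.items()):
        if previous.get(app) == (oracle, relayer):
            continue  # unchanged since the last snapshot
        notes = []
        if (oracle, relayer) != (DEFAULT_ORACLE, DEFAULT_RELAYER):
            notes.append("non-default oracle/relayer")
        if oracle == relayer:
            # Weak signal: distinct addresses can still belong to one party.
            notes.append("oracle and relayer are the same address")
        alerts.append(f"{app}: configuration changed ({', '.join(notes) or 'default configuration'})")
    return alerts


before = {"CarpetMoon": (DEFAULT_ORACLE, DEFAULT_RELAYER)}
after = {"CarpetMoon": ("0xB0b", "0xB0b")}
for alert in flag_changes(before, after):
    print(alert)
# CarpetMoon: configuration changed (non-default oracle/relayer, oracle and relayer are the same address)
```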

Even if someone detected in time that the oracle and relayer had changed and posed a security risk, it would already be too late. The new oracle and relayer can freely choose which messages to relay, or simply shut down cross-chain communication, leaving users essentially powerless. Our experiment clearly shows that even if Alice noticed the configuration change, she could do little with her bridged tokens: the new oracle and relayer no longer serve the original communication channel, so they will not relay her messages back to Ethereum.

Conclusion

As we have seen, even though our token was built on LayerZero and used its mechanisms exactly as intended, we were still able to steal the funds held in the token's custody contract. Strictly speaking, this is the fault of the application (in our case, the CarpetMoon token) rather than LayerZero itself, but it proves that LayerZero by itself does not provide any security guarantees.

When LayerZero describes its security model in terms of oracles and relayers, it assumes that application owners (or whoever holds their private keys) will not do anything unreasonable. In an adversarial environment, this assumption does not hold. It also requires users to trust application developers as a trusted third party.

Therefore, in practice, no assumptions can be made about the security of applications built using LayerZero—each application should be considered risky until proven otherwise.

In fact, this whole story began with our plan to list all omnichain tokens on L2BEAT: we struggled to figure out how to assess their risks, and while analyzing those risks we came up with the idea for this experiment.

If we list omnichain tokens on L2BEAT, we will have to place warnings on every application built with LayerZero, flagging potential security risks. But we also want to start a broader discussion about security models, because we believe independent security is a pattern that should be avoided, especially in our field.

We believe that as independent security models like LayerZero's become more popular, more projects will misuse them, causing significant damage and increasing uncertainty across the industry.