In-depth Analysis of IPFS: The Next Generation Internet Underlying Protocol

Author: Xiang, W3.Hitchhiker

What is IPFS

Powering the decentralized internet (web3.0).

A peer-to-peer hypermedia protocol that preserves and develops human knowledge in a scalable, resilient, and more open way.

IPFS is a distributed system for storing and accessing files, websites, applications, and data.

HTTP

IPFS is often compared with HTTP, which you are probably more familiar with: when you go online and open the Baidu search page, HTTP is what delivers the page you see.

The application-layer protocol of the web is the Hypertext Transfer Protocol (HTTP), the core of the traditional web. HTTP is implemented by two programs: a client program and a server program. The client and server run on different end systems and communicate by exchanging HTTP messages. HTTP defines the structure of these messages and how the client and server exchange them.

Web pages are composed of objects, and an object is simply a file, such as an HTML file, a JPEG image, or a small video file, which can be addressed via a URL. Most web pages contain a basic HTML file and several referenced objects.

HTTP defines how web clients request web pages from web servers and how servers deliver web pages to clients.

The browser's job is to speak the HTTP protocol, parse the front-end code, and display the content. When a query is submitted, the server typically looks up the results in its database and returns them to the requester, the browser, which then displays them.

Disadvantages of the HTTP Protocol

Today we mostly access the internet over HTTP or HTTPS. HTTP, the Hypertext Transfer Protocol, is used to transfer hypertext from web servers to local browsers. In the 32 years since its inception in 1990, it has played a significant role in the explosive growth and prosperity of the internet.

However, the HTTP protocol is based on a client/server architecture and operates under a centralized backbone network mechanism, which has many drawbacks.

- Data on the internet is often permanently erased due to file deletions or server shutdowns. Some statistics show that the average lifespan of web pages on the internet is only about 100 days, and we often see "404 errors" on some websites.

- The backbone network operates inefficiently and incurs high costs. Using the HTTP protocol requires downloading the entire file from a centralized server each time, which is slow and inefficient.

- The backbone network's handling of concurrent requests limits internet access speed. This centralized model also leads to congestion when traffic spikes.

- Under the existing HTTP protocol, all data is stored on these centralized servers. Internet giants have absolute control and interpretive power over our data, and various forms of regulation, blocking, and monitoring significantly restrict innovation and development.

- High costs and vulnerability to attacks. To support HTTP, high-traffic companies such as Baidu, Tencent, and Alibaba invest substantial resources in maintaining servers and in security measures against DDoS attacks. The backbone network is also susceptible to interruptions from wars, natural disasters, server outages, and other factors.

IPFS Solutions

- IPFS provides a historical version rollback feature, allowing easy access to earlier versions of files, and data persists as long as any node keeps a copy, so it is not lost when a single server deletes it. This supports permanent preservation.

- IPFS is based on a content-addressed storage model, so identical files are not stored redundantly. Eliminating duplicate copies frees storage space and reduces data storage costs. Downloading over P2P can also reduce bandwidth costs by nearly 60%.

- IPFS is based on a P2P network, allowing multiple sources to store data and enabling concurrent downloads from multiple nodes.

- Built on a decentralized distributed network, IPFS is difficult to manage and restrict centrally, making the internet more open.

- IPFS distributed storage can significantly reduce dependence on centralized backbone networks.

In summary:

HTTP relies on centralized servers, which makes it vulnerable to attacks and prone to crashes under high traffic, with slow downloads and high storage costs.

IPFS, by contrast, uses distributed nodes: it is more secure and less susceptible to DDoS attacks, does not rely on the backbone network, reduces storage costs while providing ample storage space, offers fast downloads, keeps historical versions, and theoretically enables permanent storage.

New technologies replace old ones for two reasons:

First, they can improve system efficiency;

Second, they can reduce system costs.

IPFS achieves both.

The IPFS team took a highly modular approach during development, building the project like stacking blocks. The Protocol Labs team, established in 2015, spent two years developing three modules that form the foundation of IPFS: IPLD, libp2p, and Multiformats.

Multiformats is a collection of self-describing formats (from a value, you can tell how the value was generated) that covers cryptographic hash functions, including six mainstream ones such as SHA1, SHA256, SHA512, and BLAKE2b. It is used to generate and describe node IDs and data fingerprints.

libp2p is the core of IPFS. It helps developers quickly build a usable P2P networking layer across diverse transport protocols and complex network devices, which is why IPFS technology is favored by many blockchain projects.

IPLD is essentially conversion middleware that unifies existing heterogeneous data structures into a single format, facilitating data exchange and interoperability between different systems. IPLD currently supports data structures such as Bitcoin and Ethereum block data, as well as IPFS and IPLD data. This is another reason IPFS is popular among blockchain systems: IPLD can unify different block structures into one standard for transmission, giving developers a proven foundation so they need not worry as much about performance, stability, and bugs.

Benefits of IPFS

- A hypermedia distribution protocol that integrates concepts from Kademlia, BitTorrent, and Git.

- A completely decentralized peer-to-peer transfer network that avoids central node failures and censorship.

- Driving into the future of the internet: new browsers already support the IPFS protocol by default (Brave, Opera), and traditional browsers can access files stored in the IPFS network through public IPFS gateways like https://ipfs.io, or by installing the IPFS Companion extension.

- Next-generation content delivery network (CDN): just by adding files to local nodes, files can be obtained globally through cache-friendly content hash addresses and BitTorrent-like network bandwidth distribution.

- Backed by a strong open-source community, providing a developer toolkit for building complete distributed applications and services.

IPFS defines how files are stored, indexed, and transmitted in the system, converting uploaded files into a specialized data format for storage. IPFS derives each file's address from a hash of its content, so identical files always get the same unique address regardless of device or location (unlike a URL, this address is intrinsic to the content and guaranteed by a cryptographic hash function; you cannot change it, and you do not need to). IPFS then connects all devices in the network through a file system, so files stored in IPFS can be retrieved quickly from anywhere in the world, unaffected by firewalls (no network proxy needed). Fundamentally, IPFS can change how web content is distributed, achieving decentralization.

How IPFS Works

IPFS is a peer-to-peer (P2P) storage network. Content can be accessed through nodes located anywhere in the world, which may relay information, store information, or both. IPFS knows how to find the content you request using its content address rather than its location.

IPFS rests on three basic principles:

- Unique identification through content addressing

- Content linking via Directed Acyclic Graph (DAG)

- Content discovery through Distributed Hash Table (DHT)

These three principles are interdependent and form the IPFS ecosystem. Let's start with content addressing and unique identification of content.

Content Addressing and Unique Identification of Content

IPFS uses content addressing to identify content based on its content rather than its location. Finding items by content is what everyone has always done.

For example, when you look for a book in a library, you often search by title; that is content addressing because you are asking what it is.

If you were to use location addressing to find that book, you would look for it by its location: "I want the book on the second floor, third shelf, fourth from the left."

If someone moved that book, you would be out of luck!

This problem exists on the internet and your computer! Currently, content is found by location, such as:

https://en.wikipedia.org/wiki/Aardvark

/Users/Alice/Documents/term_paper.doc

C:\Users\Joe\My Documents\project_sprint_presentation.ppt

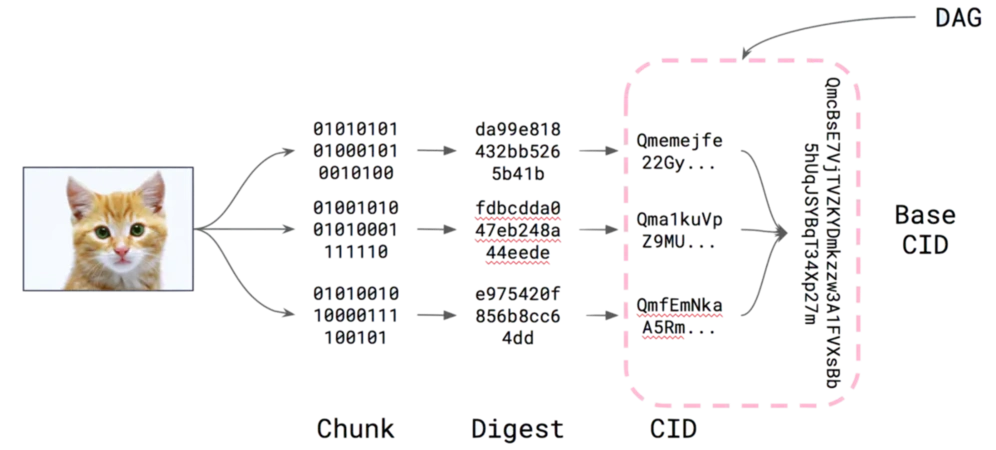

In contrast, every piece of content using the IPFS protocol has a Content Identifier, or CID, derived from a cryptographic hash of the content. The hash is unique to the content it comes from, even though it is short compared to the original content.

Many distributed systems use hashes for content addressing, not only to identify content but also to link it together—from supporting code commits to running cryptocurrencies on blockchains, everything utilizes this strategy. However, the underlying data structures in these systems may not be interoperable.

CID (Content Identifiers)

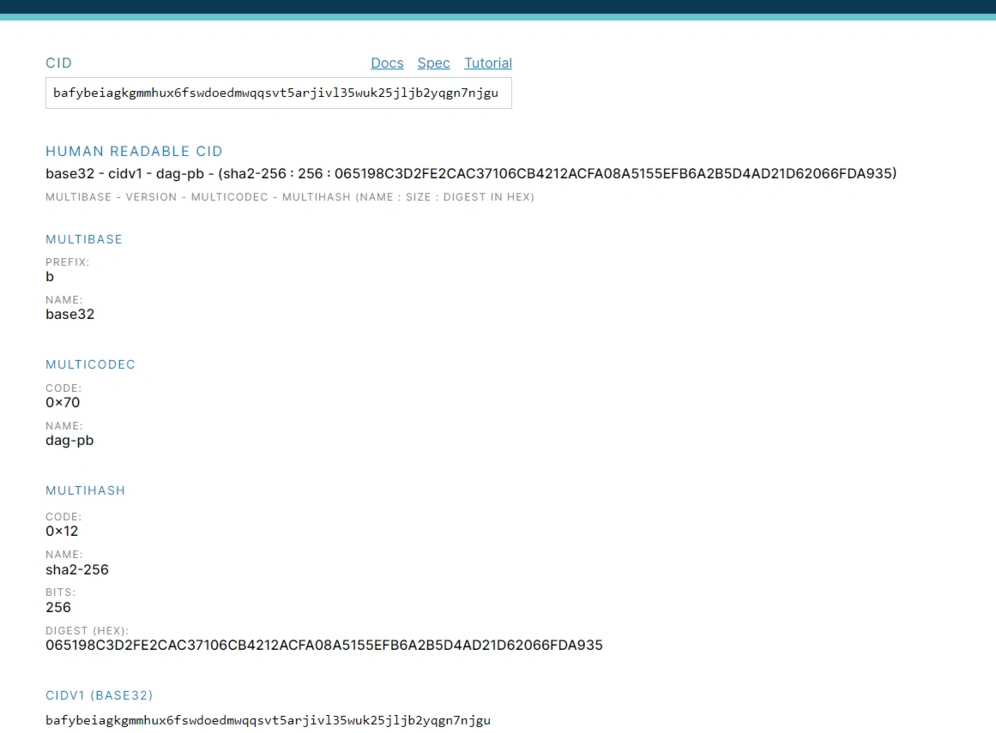

The CID specification originated in IPFS and now exists in multiple formats, supporting a wide range of projects including IPFS, IPLD, libp2p, and Filecoin. Although this article uses IPFS examples, this section focuses on the CID itself, which serves as the core identifier for referencing content in each of these distributed information systems.

A content identifier, or CID, is a self-describing content-addressed identifier. It does not indicate where the content is stored; instead, it forms an address from the content itself. The number of characters in a CID depends on the cryptographic hash of the underlying content, not on the size of the content. Since most content in IPFS is hashed with sha2-256, the hash inside most CIDs you encounter will be the same size (256 bits, equivalent to 32 bytes). This makes them easier to manage, especially when dealing with many pieces of content.

For example, if we store an image of an aardvark on the IPFS network, its CID would look like this: QmcRD4wkPPi6dig81r5sLj9Zm1gDCL4zgpEj9CfuRrGbzF.
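To make the format concrete, here is a minimal Python sketch of how a CIDv0 string of this shape can be assembled: take the sha2-256 digest, prefix it with the multihash header (0x12 for sha2-256, 0x20 for a 32-byte length), and encode the result in base58btc. That fixed header is why CIDv0 strings always start with "Qm". One simplifying assumption: the sketch hashes the raw bytes directly, whereas `ipfs add` first chunks a file and wraps it in UnixFS/dag-pb nodes, so it will not reproduce the CID IPFS assigns to a real image. The helper names `base58btc_encode` and `cid_v0` are hypothetical.

```python
import hashlib

# Bitcoin-style base58 alphabet (no 0, O, I, or l), used by base58btc.
B58_ALPHABET = "123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz"


def base58btc_encode(data: bytes) -> str:
    """Encode bytes as base58btc by repeated division by 58."""
    num = int.from_bytes(data, "big")
    chars = []
    while num > 0:
        num, rem = divmod(num, 58)
        chars.append(B58_ALPHABET[rem])
    # Each leading zero byte is written as a leading "1".
    leading_zeros = len(data) - len(data.lstrip(b"\x00"))
    return "1" * leading_zeros + "".join(reversed(chars))


def cid_v0(data: bytes) -> str:
    """CIDv0-style string: base58btc(multihash(sha2-256(data))).

    Simplification: real IPFS hashes a UnixFS/dag-pb node, not raw bytes.
    """
    digest = hashlib.sha256(data).digest()
    multihash = bytes([0x12, 0x20]) + digest  # 0x12 = sha2-256, 0x20 = 32 bytes
    return base58btc_encode(multihash)


print(cid_v0(b"a picture of an aardvark"))  # 46 characters, starting with "Qm"
```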

For example, here is Uniswap's IPFS link:

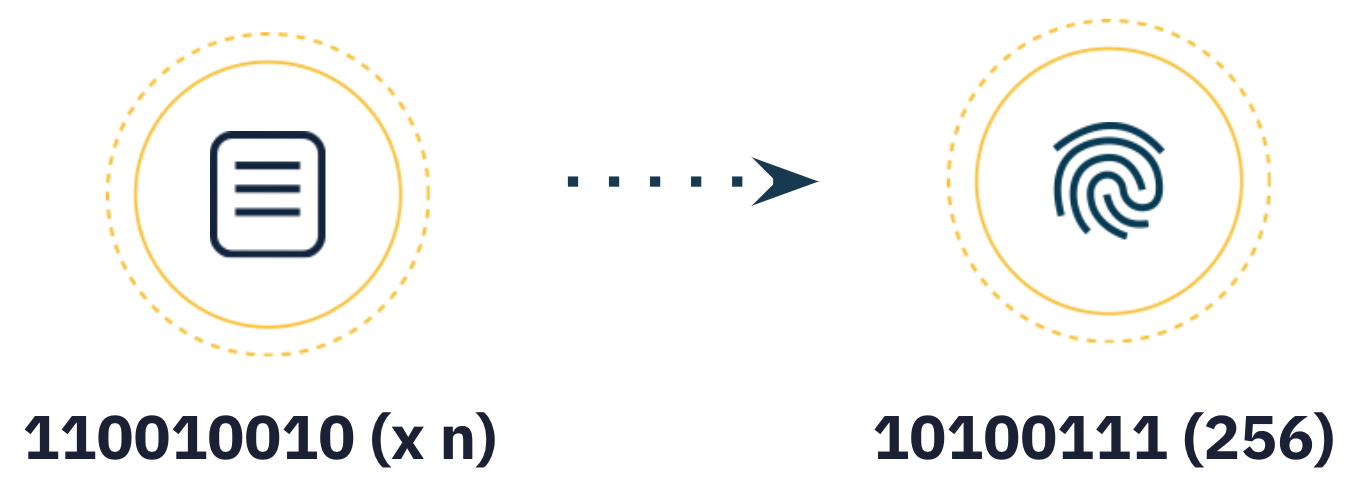

The first step in creating a CID is to transform the input data with a cryptographic hash function, which maps input (data or files) of any size to a fixed-size output. The output is called a cryptographic hash, a digital fingerprint, or simply a hash (IPFS defaults to sha2-256).

The cryptographic algorithm used must generate hashes with the following characteristics:

- Deterministic: The same input should always produce the same hash.

- Non-correlated: A small change in the input data should produce a completely different hash.

- One-way: It is infeasible to reverse-engineer the input data from the hash value.

- Uniqueness: It is infeasible to find two different files that produce the same hash.

Note that if we change a single pixel in the aardvark image, the cryptographic algorithm will generate a completely different hash for the image.
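A quick way to observe the deterministic and non-correlated properties is Python's standard `hashlib` with sha2-256. In this sketch a repeated byte pattern stands in for the image data, and flipping one bit of one byte stands in for changing a pixel; the one-way and uniqueness properties cannot be shown by running code, only relied upon.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Hex digest of sha2-256, the default hash in IPFS."""
    return hashlib.sha256(data).hexdigest()


image = bytearray(b"\x10\x20\x30" * 1000)  # stand-in for raw image bytes
original = sha256_hex(bytes(image))

# Deterministic: hashing the same bytes again gives the same digest.
print(sha256_hex(bytes(image)) == original)  # True

# Non-correlated: flip one bit of one byte (our "single pixel" change).
image[500] ^= 0x01
changed = sha256_hex(bytes(image))

# Count how many of the 256 output bits differ (typically about half).
diff_bits = bin(int(original, 16) ^ int(changed, 16)).count("1")
print(original)
print(changed)
print(f"{diff_bits} of 256 bits differ")
```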

When we retrieve data using a content address, we can be assured of seeing the expected version of that data. This is completely different from location addressing on the traditional web, where the content at a given address (URL) can change over time.
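That assurance comes from re-hashing what you receive. The sketch below assumes a simplified address (the hex SHA-256 of the bytes rather than a full CID) and a hypothetical `UntrustedProvider` class; the point is that the requester can reject a tampered copy without trusting whoever served it.

```python
import hashlib


def address_of(data: bytes) -> str:
    """Simplified content address: hex SHA-256 of the bytes (not a full CID)."""
    return hashlib.sha256(data).hexdigest()


class UntrustedProvider:
    """Hypothetical peer that returns whatever bytes it holds for an address."""

    def __init__(self, blocks: dict[str, bytes]) -> None:
        self.blocks = blocks

    def get(self, address: str) -> bytes:
        return self.blocks[address]


def fetch_verified(provider: UntrustedProvider, address: str) -> bytes:
    """Return the data only if it hashes back to the requested address."""
    data = provider.get(address)
    if address_of(data) != address:
        raise ValueError("content does not match its address")
    return data


page = b"Aardvark: a nocturnal mammal native to Africa."
addr = address_of(page)

honest = UntrustedProvider({addr: page})
tampered = UntrustedProvider({addr: page.replace(b"Africa", b"Europe")})

print(fetch_verified(honest, addr))
try:
    fetch_verified(tampered, addr)
except ValueError as err:
    print("rejected tampered copy:", err)
```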

Structure of CID

Multiformats

Multiformats is primarily responsible for hash-based identification and self-description of data within the IPFS ecosystem.

Multiformats is a collection of protocols for future-proofing systems; its self-describing formats allow systems to interoperate and to be upgraded without breaking one another.

The Multiformats project includes the following protocols:

- multihash: self-describing hashes

- multiaddr: self-describing network addresses

- multibase: self-describing base encodings

- multicodec: self-describing serialization

- multistream: self-describing stream network protocols

- multigram (WIP): self-describing packet network protocols
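multihash, multicodec, and multibase combine to form a CID. In CIDv1 the binary form is `<version><content codec><multihash>`, each code written as an unsigned varint, and the string form adds a one-character multibase prefix. This Python sketch assumes the `raw` codec (0x55), sha2-256 (0x12), and base32 lowercase without padding (prefix `b`), which is why raw sha2-256 CIDv1 strings begin with `bafkrei`. As a sketch, it hashes bytes directly rather than building UnixFS nodes, and the function names are hypothetical.

```python
import base64
import hashlib


def uvarint(n: int) -> bytes:
    """Unsigned LEB128 varint, as multiformats uses for codes and lengths."""
    out = bytearray()
    while True:
        byte, n = n & 0x7F, n >> 7
        if n:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


SHA2_256 = 0x12  # multihash code for sha2-256
RAW = 0x55       # multicodec code for raw binary content
CID_V1 = 0x01


def multihash_sha256(data: bytes) -> bytes:
    """<hash code><digest length><digest>."""
    digest = hashlib.sha256(data).digest()
    return uvarint(SHA2_256) + uvarint(len(digest)) + digest


def cid_v1_raw(data: bytes) -> str:
    """<multibase prefix> + base32(<version><codec><multihash>)."""
    binary = uvarint(CID_V1) + uvarint(RAW) + multihash_sha256(data)
    b32 = base64.b32encode(binary).decode("ascii").lower().rstrip("=")
    return "b" + b32  # "b" = base32 lowercase, unpadded


print(cid_v1_raw(b"hello world"))  # starts with "bafkrei"
```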

Content Linking Directed Acyclic Graph (DAG)

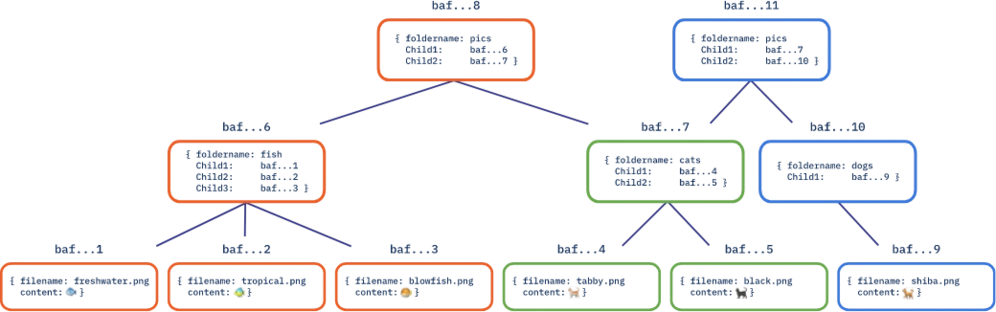

A Merkle DAG inherits the distributability of CIDs. Using content addressing for DAGs has some interesting implications for their distribution. First, anyone who has a DAG can act as a provider for that DAG. Second, when we retrieve data encoded as a DAG, such as a directory of files, we can retrieve all the child nodes of a node in parallel, potentially from many different providers!

Third, file servers are not limited to centralized data centers, allowing our data to have a broader reach. Finally, because each node in the DAG has its own CID, the DAG it represents can be shared and retrieved independently of any DAG it is embedded in.
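The following Python sketch illustrates these properties under simplifying assumptions: data is split into fixed 8-byte chunks, each chunk is a leaf stored under its SHA-256, and the root is a JSON list of child addresses that is itself hashed. IPFS really uses UnixFS over dag-pb with far larger chunks, and `build_dag` and `read_dag` are hypothetical helpers; the sketch only shows that each node has its own address, can be fetched on its own, and that the root address depends on every chunk.

```python
import hashlib
import json


def put(store: dict, node: bytes) -> str:
    """Store a node under its content address (hex SHA-256) and return it."""
    address = hashlib.sha256(node).hexdigest()
    store[address] = node
    return address


def build_dag(store: dict, data: bytes, chunk_size: int = 8) -> str:
    """Split data into leaf chunks, then store a root node linking to them."""
    links = [put(store, data[i:i + chunk_size])
             for i in range(0, len(data), chunk_size)]
    return put(store, json.dumps({"links": links}).encode())


def read_dag(store: dict, root: str) -> bytes:
    """Reassemble the data; each child could come from a different provider."""
    links = json.loads(store[root])["links"]
    return b"".join(store[address] for address in links)


store: dict = {}
root = build_dag(store, b"the quick brown fox jumps over the lazy dog")
print(read_dag(store, root))

# Any node can be fetched and shared on its own, by its own address.
third_chunk = json.loads(store[root])["links"][2]
print(store[third_chunk])  # b'fox jump'

# Changing one chunk changes that leaf's address and therefore the root's.
other_root = build_dag(store, b"the quick brown cat jumps over the lazy dog")
print(root != other_root)  # True
```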

Verifiability

Have you ever backed up files and then, months later, found two backups and wondered whether their contents were the same? Instead of comparing the files one by one, you can compute a Merkle DAG for each backup: if the CIDs of the root directories match, you know one of the backups can safely be deleted, freeing up some space on your hard drive!
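Here is a hedged sketch of that comparison, assuming a backup is modeled as nested dicts (directories) and bytes (files), with a hypothetical `tree_cid` function that hashes each directory from its entries' names and child hashes. This is not the UnixFS directory format; with the real tooling, `ipfs add -r --only-hash` computes a directory's CID without storing the data.

```python
import hashlib


def tree_cid(node) -> str:
    """Root hash of a directory tree: files are bytes, directories are dicts.

    A directory is hashed from its entries in sorted name order, so the result
    does not depend on the order in which files were listed.
    """
    if isinstance(node, bytes):
        return hashlib.sha256(b"file\x00" + node).hexdigest()
    h = hashlib.sha256(b"dir\x00")
    for name in sorted(node):
        # File names cannot contain NUL, so it safely separates name and hash.
        h.update(name.encode() + b"\x00" + tree_cid(node[name]).encode())
    return h.hexdigest()


backup_jan = {"notes.txt": b"buy milk", "photos": {"a.jpg": b"\xff\xd8jpeg"}}
backup_mar = {"photos": {"a.jpg": b"\xff\xd8jpeg"}, "notes.txt": b"buy milk"}
backup_jun = {"notes.txt": b"buy eggs", "photos": {"a.jpg": b"\xff\xd8jpeg"}}

print(tree_cid(backup_jan) == tree_cid(backup_mar))  # True: same contents
print(tree_cid(backup_jan) == tree_cid(backup_jun))  # False: notes.txt changed
# Subtrees compare independently: the photos folder did not change.
print(tree_cid(backup_jan["photos"]) == tree_cid(backup_jun["photos"]))  # True
```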

Distributability

Consider, for example, distributing a large dataset. On the traditional web:

- Developers sharing files are responsible for maintaining servers and their associated costs.

- The same server is likely used to respond to requests from around the world.

- Data itself can be archived as a single file and distributed in a monolithic manner.

- It is difficult to find alternative providers for the same data.

- Data may be large chunks that must be downloaded serially from a single provider.

- It is difficult for others to help share the data.

Merkle DAG helps us alleviate all these issues. By converting data into content-addressed DAGs:

- Anyone who wants to can help send and receive files.

- Nodes from around the world can participate in serving data.

- Each part of the DAG has its own CID and can be distributed independently.

- It is easy to find alternative providers for the same data.

- The nodes that make up the DAG are small and can be downloaded in parallel from many different providers.

All of this contributes to the scalability of important data.
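Parallel retrieval from several providers can be simulated in a few lines. In this sketch the peer names, their block holdings, and the `fetch` helper are hypothetical, and a thread pool stands in for network requests; in IPFS the block exchange is handled by its Bitswap protocol, which this code does not implement. Each block is verified against its address before it is accepted, so it does not matter which peer supplied it.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def addr(block: bytes) -> str:
    """Simplified content address: hex SHA-256 of the block."""
    return hashlib.sha256(block).hexdigest()


blocks = [b"chunk-0 ", b"chunk-1 ", b"chunk-2 ", b"chunk-3 "]
wanted = [addr(b) for b in blocks]

# Hypothetical peers, each holding only some of the file's blocks.
providers = {
    "peer-tokyo": {wanted[0]: blocks[0], wanted[2]: blocks[2]},
    "peer-berlin": {wanted[1]: blocks[1], wanted[2]: blocks[2]},
    "peer-lima": {wanted[3]: blocks[3], wanted[0]: blocks[0]},
}


def fetch(cid: str) -> tuple[str, bytes]:
    """Return the first provider's copy that verifies against its address."""
    for peer, held in providers.items():
        data = held.get(cid)
        if data is not None and addr(data) == cid:
            return peer, data
    raise LookupError(f"no provider has {cid}")


# Request all blocks concurrently; map() returns results in request order.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(fetch, wanted))

for peer, data in results:
    print(peer, data)
file_bytes = b"".join(data for _, data in results)
print(file_bytes)  # b'chunk-0 chunk-1 chunk-2 chunk-3 '
```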

Deduplication

For example, consider browsing the web! When a person uses a browser to access a web page, the browser must first download resources related to that page, including images, text, and styles. Many web pages actually look very similar, just using the same theme with slight variations. This creates a lot of redundancy.

When the browser is optimized enough, it can avoid downloading the same component multiple times. Whenever a user visits a new website, the browser only needs to download the nodes corresponding to different parts in its DAG, while previously downloaded parts do not need to be downloaded again! (Think of WordPress themes, Bootstrap CSS libraries, or common JavaScript libraries.)
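A minimal sketch of that saving, assuming a page is described by the list of its components' content addresses and a dict stands in for the blocks held by remote peers (`publish` and `Browser` are hypothetical names): the second site reuses the shared CSS and JavaScript blocks already in the cache, so only its own HTML is downloaded.

```python
import hashlib

network: dict[str, bytes] = {}  # stand-in for blocks held by remote peers


def publish(data: bytes) -> str:
    """Make a block available on the simulated network; return its address."""
    cid = hashlib.sha256(data).hexdigest()
    network[cid] = data
    return cid


class Browser:
    """Hypothetical browser with a content-addressed cache."""

    def __init__(self) -> None:
        self.cache: dict[str, bytes] = {}
        self.downloads = 0

    def visit(self, page: list[str]) -> bytes:
        """Load a page given the addresses of its components."""
        for cid in page:
            if cid not in self.cache:  # only missing blocks are fetched
                self.cache[cid] = network[cid]
                self.downloads += 1
        return b"".join(self.cache[cid] for cid in page)


css = publish(b"/* shared CSS library */")
js = publish(b"// shared JavaScript library")
site_a = [publish(b"<h1>Site A</h1>"), css, js]
site_b = [publish(b"<h1>Site B</h1>"), css, js]

browser = Browser()
browser.visit(site_a)
print(browser.downloads)  # 3: everything was new
browser.visit(site_b)
print(browser.downloads)  # 4: only Site B's own HTML was downloaded
```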

Distributed Hash Table (DHT)

A Distributed Hash Table (DHT) is a distributed system for mapping keys to values. In IPFS, the DHT is a fundamental component of the content routing system, acting like a combination of a directory and a navigation system. It maps the content users are looking for to the peer nodes that store it. You can think of it as a huge table that records who has what data.
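The IPFS DHT is based on Kademlia (one of the influences listed earlier), which measures the distance between two IDs as their bitwise XOR and stores each record on the peers whose IDs are closest to the key. The sketch below shows only that placement rule, assuming a handful of hypothetical peers and a global view of all of them; the real DHT uses a larger replication factor and iterative lookups through partial routing tables.

```python
import hashlib


def to_id(data: bytes) -> int:
    """Map a peer name or content key into one shared 256-bit ID space."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def closest_peers(key: int, peers: dict[str, int], k: int = 2) -> list[str]:
    """Kademlia distance is the XOR of two IDs, compared as integers."""
    return sorted(peers, key=lambda name: peers[name] ^ key)[:k]


# Hypothetical network of six peers, each with a local table of provider records.
peers = {name: to_id(name.encode())
         for name in ["alice", "bob", "carol", "dave", "erin", "frank"]}
records: dict[str, dict[int, set[str]]] = {name: {} for name in peers}


def provide(content: bytes, provider: str) -> int:
    """Announce that `provider` has `content` by storing records near its key."""
    key = to_id(content)
    for name in closest_peers(key, peers):
        records[name].setdefault(key, set()).add(provider)
    return key


def find_providers(key: int) -> set[str]:
    """Ask the peers closest to the key who has the content."""
    found: set[str] = set()
    for name in closest_peers(key, peers):
        found |= records[name].get(key, set())
    return found


key = provide(b"aardvark.jpg bytes", provider="carol")
print("records stored on:", closest_peers(key, peers))
print("who has it?", find_providers(key))  # {'carol'}
```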

Libp2p

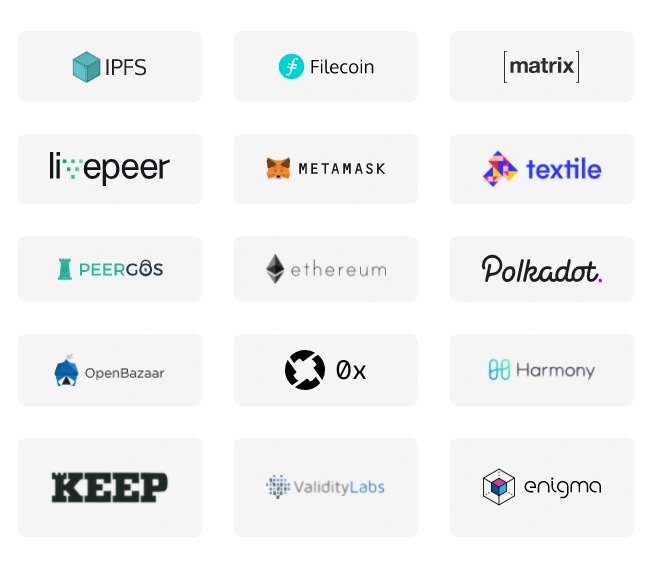

libp2p is a modular network stack that evolved from IPFS into a standalone project. It is also used by Polkadot and partially by eth2.0.

To explain why libp2p is such an important part of decentralized networks, we need to step back and understand its origins. The initial implementation of libp2p began with IPFS, a peer-to-peer file-sharing system. Let's start by exploring the network problems that IPFS aims to solve.

Network

Networks are very complex systems with their own rules and limitations, so when designing these systems, we need to consider many situations and use cases:

- Firewalls: Your laptop may have a firewall installed that blocks or restricts certain connections.

- NAT: Your home WiFi router, with NAT (Network Address Translation), converts your laptop's local IP address into a single IP address that can connect to the outside network.

- High-latency networks: On these networks, data takes a long time to arrive, so users wait a long time to see their content.

- Reliability: Networks around the world vary widely in quality. Many users are on slow connections that drop frequently and lack the robustness to provide the service users deserve.

- Roaming: Mobile addressing is another case to handle: users' devices must remain uniquely discoverable as they move between networks around the world. Today this is handled by distributed systems that require many coordination points and connections, but the best solution is a decentralized one.

- Censorship: In the current state of the network, if you are a government entity, it is relatively easy to block websites in specific domain names. This is useful for preventing illegal activities, but it becomes a problem when an authoritarian regime wants to deprive its population of access to resources.

- Runtimes with different properties: There are many kinds of runtimes, such as Internet of Things (IoT) devices (Raspberry Pi, Arduino, etc.), which are gaining significant adoption. Because these devices have limited resources, they often use specialized protocols that make many assumptions about their runtime environment.

- Innovation is very slow: Even the most successful companies with abundant resources may take decades to develop and deploy new protocols.

- Data privacy: Consumers are increasingly concerned about companies that do not respect user privacy.

Current Issues with P2P Protocols

Peer-to-peer (P2P) networks were envisioned as a way to create resilient networks that can continue to function even if peer nodes disconnect from the network due to significant natural or man-made disasters, allowing people to continue communicating.

P2P networks can be used for various use cases, from video calls (like Skype) to file sharing (like IPFS, Gnutella, KaZaA, eMule, and BitTorrent).

Basic Concepts

Peer - A participant in a decentralized network. Peer nodes are participants with equal privileges and capabilities in the application. In IPFS, when you load the IPFS desktop application on your laptop, your device becomes a peer node in the decentralized IPFS network.

Peer-to-Peer (P2P) - A decentralized network where the workload is shared among peer nodes. Therefore, in IPFS, each peer node may host all or part of the files to be shared with other peer nodes. When a node requests a file, any node that has those file blocks can participate in sending the requested file. The requesting node can later share the data with other nodes.

IPFS draws inspiration from current and past network applications and research to improve its P2P system. The academic community has produced a wealth of scientific papers with ideas for solving some of these problems, but while the research has yielded preliminary results, it lacks usable, adaptable code implementations.

Existing P2P system code implementations are often hard to find, and where they do exist, they are usually difficult to reuse or repurpose for the following reasons:

- Poor or nonexistent documentation

- Restrictive licenses or inability to find licenses

- Very old code last updated over a decade ago

- No contact points (no maintainers to reach out to)

- Closed-source (private) code

- Deprecated products

- No specifications provided

- No user-friendly APIs exposed

- Implementations are too tightly coupled to specific use cases

- Inability to use future protocol upgrades

There must be a better way. Seeing that the main issue is interoperability, the IPFS team envisioned a way to integrate all current solutions and provide a platform that fosters innovation: a new modular system that allows future solutions to be integrated seamlessly into the network stack.

libp2p is the network stack of IPFS but has been extracted from IPFS to become a standalone first-class project and a dependency for IPFS.

In this way, libp2p can further develop independently of IPFS, gaining its own ecosystem and community. IPFS is just one of the many users of libp2p.

This way, each project can focus solely on its own goals:

IPFS focuses more on content addressing, which is finding, retrieving, and verifying any content on the network.

libp2p focuses more on process addressing, which is finding, connecting, and verifying any data transfer process on the network. So how does libp2p achieve this?

The answer is: modularity.

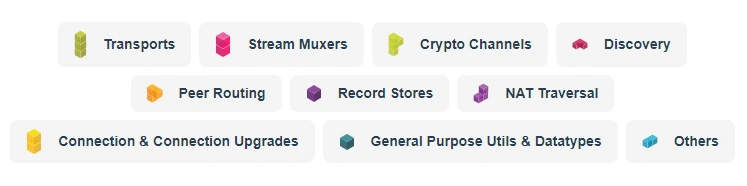

libp2p has identified specific parts that can make up the network stack:

libp2p has implementations in seven programming languages, and its JavaScript implementation also works in desktop and mobile browsers! This is crucial because it allows applications to run libp2p on both desktop and mobile devices.

Applications include file storage, video streaming, encrypted wallets, development tools, and blockchains. Many top blockchain projects have already adopted the libp2p module of IPFS.
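One concrete example of this modularity is how peers agree on which protocol to use for each stream: libp2p uses multistream-select (the "multistream" entry in the Multiformats list). Each message is a protocol ID plus a trailing newline, prefixed with its length as an unsigned varint; the listener echoes an ID to accept it or replies `na` to decline. This is an in-memory Python sketch with no sockets that leaves out details such as both sides sending the header concurrently; `/noise`, `/yamux/1.0.0`, and `/mplex/6.7.0` are real libp2p protocol IDs, used here only as sample input, and the class and function names are hypothetical.

```python
from typing import Optional

MULTISTREAM = "/multistream/1.0.0"


def encode_msg(text: str) -> bytes:
    """Frame one message: uvarint length prefix, then the text and a newline."""
    payload = (text + "\n").encode("utf-8")
    n, prefix = len(payload), bytearray()
    while True:
        byte, n = n & 0x7F, n >> 7
        if n:
            prefix.append(byte | 0x80)  # more length bytes follow
        else:
            prefix.append(byte)
            return bytes(prefix) + payload


def decode_msg(frame: bytes) -> str:
    """Inverse of encode_msg for a single frame."""
    length, shift, i = 0, 0, 0
    while True:
        byte = frame[i]
        length |= (byte & 0x7F) << shift
        i += 1
        if byte < 0x80:
            break
        shift += 7
    text = frame[i:i + length].decode("utf-8")
    if not text.endswith("\n"):
        raise ValueError("malformed multistream message")
    return text[:-1]


class Listener:
    """In-memory listener that supports a fixed set of protocol IDs."""

    def __init__(self, supported: list[str]) -> None:
        self.supported = {MULTISTREAM, *supported}

    def respond(self, frame: bytes) -> bytes:
        proto = decode_msg(frame)
        # Echoing the ID accepts it; "na" means "not available".
        return encode_msg(proto if proto in self.supported else "na")


def negotiate(listener: Listener, preferences: list[str]) -> Optional[str]:
    """Dialer side: agree on multistream-select, then try protocols in order."""
    if decode_msg(listener.respond(encode_msg(MULTISTREAM))) != MULTISTREAM:
        raise ConnectionError("peer does not speak multistream-select")
    for proto in preferences:
        if decode_msg(listener.respond(encode_msg(proto))) == proto:
            return proto
    return None


listener = Listener(["/noise", "/yamux/1.0.0"])
print(negotiate(listener, ["/mplex/6.7.0", "/yamux/1.0.0"]))  # /yamux/1.0.0
print(encode_msg("na"))  # b'\x03na\n'
```

Because each side only needs to recognize protocol IDs, a new transport, security, or multiplexing module can be added without changing the negotiation itself.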

IPLD

IPLD is used to understand and process data.

IPLD is a conversion middleware that unifies existing heterogeneous data structures into a single format, facilitating data exchange and interoperability between different systems, using CID as links.

First, we define a "data model" that describes the domain and scope of the data. This is important because it forms the foundation of everything else we build. (Broadly speaking, the data model "looks like JSON": maps, strings, lists, and so on.) After that, we define "codecs," which describe how to parse the data model from serialized messages and how to emit it in the desired form. IPLD has many codecs. You can choose among them based on the other applications you want to interoperate with, or simply on the balance of performance and human readability your own application prefers.
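The split between data model and codec can be illustrated with Python's `json` module. The sketch assumes a simplified setting: the value is a map containing a string, a list, and a link written as `{"/": "<CID>"}` (the convention DAG-JSON uses for links), and two JSON serializations stand in for two codecs (`compact_codec` is DAG-JSON-like, `pretty_codec` is hypothetical). Both decode to the same data-model value, yet they produce different bytes and therefore different hashes, which is why a CID records the codec as well as the hash.

```python
import hashlib
import json

# One data-model value: a map holding a string, a list, and a link.
# The link uses DAG-JSON's {"/": "<CID>"} form; the CID is the aardvark
# example quoted earlier in this article.
value = {
    "name": "aardvark",
    "tags": ["mammal", "africa"],
    "image": {"/": "QmcRD4wkPPi6dig81r5sLj9Zm1gDCL4zgpEj9CfuRrGbzF"},
}


def compact_codec(v) -> bytes:
    """Deterministic encoding: sorted keys, no whitespace (DAG-JSON-like)."""
    return json.dumps(v, sort_keys=True, separators=(",", ":")).encode()


def pretty_codec(v) -> bytes:
    """Hypothetical human-friendly codec for the same data model."""
    return json.dumps(v, sort_keys=True, indent=2).encode()


for codec in (compact_codec, pretty_codec):
    encoded = codec(value)
    same_value = json.loads(encoded) == value  # decodes to the same data model
    digest = hashlib.sha256(encoded).hexdigest()[:16]
    print(f"{codec.__name__}: {len(encoded)} bytes, same value: {same_value}, hash {digest}...")
```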

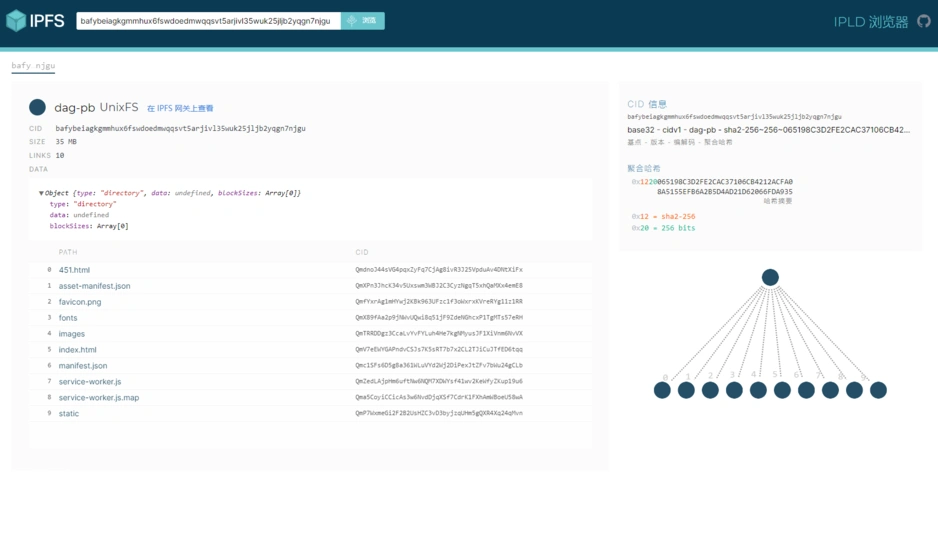

IPLD implements the top three protocol layers: objects, files, and naming.

- Object Layer - Data in IPFS is organized in a Merkle Directed Acyclic Graph (Merkle DAG) structure, where nodes are called objects and can contain data or links to other objects. Links are cryptographic hashes of the target data embedded in the source. These data structures provide many useful properties, such as content addressing, data integrity, deduplication, etc.;

- File Layer - To model a Git-like version control system on top of the Merkle DAG, IPFS defines the following objects:

  - blob data block: a blob is a variable-sized data block (without links) representing a data chunk;

  - list: used to organize blobs or other lists in order, typically representing a file;

  - tree: represents directories and contains blobs, lists, and other trees;

  - commit: similar to a Git commit, representing a snapshot in the version history of an object;
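A hedged sketch of these four object types, assuming objects are stored in a dict under the SHA-256 of their sorted-key JSON encoding (real IPFS objects use different encodings, and `blob`, `make_list`, `tree`, and `commit` are hypothetical helpers). Editing the README produces a new blob, tree, and commit, while the unchanged file is linked from both snapshots instead of being copied.

```python
import hashlib
import json
from typing import Optional

objects: dict[str, dict] = {}


def put(obj: dict) -> str:
    """Store an object under the SHA-256 of its canonical JSON encoding."""
    address = hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()
    objects[address] = obj
    return address


def blob(data: str) -> str:
    return put({"type": "blob", "data": data})  # raw data, no links


def make_list(*parts: str) -> str:
    return put({"type": "list", "links": list(parts)})  # ordered chunks of a file


def tree(entries: dict[str, str]) -> str:
    return put({"type": "tree", "links": entries})  # name -> object address


def commit(root: str, parent: Optional[str], message: str) -> str:
    return put({"type": "commit", "tree": root, "parent": parent, "message": message})


data_file = make_list(blob("part 1, "), blob("part 2"))
c1 = commit(tree({"README": blob("hello"), "data.bin": data_file}), None, "initial")
c2 = commit(tree({"README": blob("hello, IPFS"), "data.bin": data_file}), c1, "update README")

# Walk the version history from the newest snapshot back to the first.
current = c2
while current is not None:
    print(objects[current]["message"])  # "update README", then "initial"
    current = objects[current]["parent"]

# Both snapshots link to the same, unchanged list object for data.bin.
tree1, tree2 = objects[c1]["tree"], objects[c2]["tree"]
print(objects[tree1]["links"]["data.bin"] == objects[tree2]["links"]["data.bin"])  # True
```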

- Naming Layer - Since every change to an object alters its hash, a stable name that maps to the latest hash is needed. IPNS (InterPlanetary Naming System) assigns each user a mutable namespace and allows objects to be published to paths signed with the user's private key, so the authenticity of the objects can be verified. This works much like a URL.


IPFS combines the functions of the modules above into a containerized application that runs on independent nodes, available for everyone to use and access. IPFS allows participants in the network to store, request, and transmit verifiable data to each other. Because IPFS is open source, it can be downloaded and used freely, and it has already been adopted by numerous teams.

Filecoin

Using IPFS, nodes can store the data they deem important, but without a simple way or an incentive for others to join the network and store data, IPFS is hard to promote. Filecoin was born to fill this gap as the incentive layer for IPFS.

Filecoin adds incentivized storage to IPFS. IPFS users can reliably store their data directly on Filecoin, opening the door to numerous applications and use cases for the network.