Delphi Digital Deep Report: The Endgame of Scalability from the Perspective of Modular Blockchains

Author: Can Gurel

Compiled by: Sato Xi, The Way of DeFi

Report Highlights:

- Monolithic chains are limited by what a single node can handle, while modular ecosystems bypass this limitation, providing a more sustainable form of scalability;

- A key motivation behind modularity is effective resource pricing. Modular chains can offer more predictable fees by dividing applications into different resource pools (i.e., fee markets);

- However, modularity introduces a new issue known as data availability (DA), which can be addressed in various ways. For example, rollups batch transactions executed off-chain and publish the data on-chain; by making the data available on-chain, they overcome this issue, inherit the security of the base layer, and establish trustless L1<>L2 communication;

- The latest form of modular chains, known as dedicated data availability (DA) layers, is designed to serve as a shared security layer for Rollups. Given that DA chains have scalability advantages, they have the potential to become the ultimate solution for blockchain scalability, with Celestia being a pioneering project in this regard.

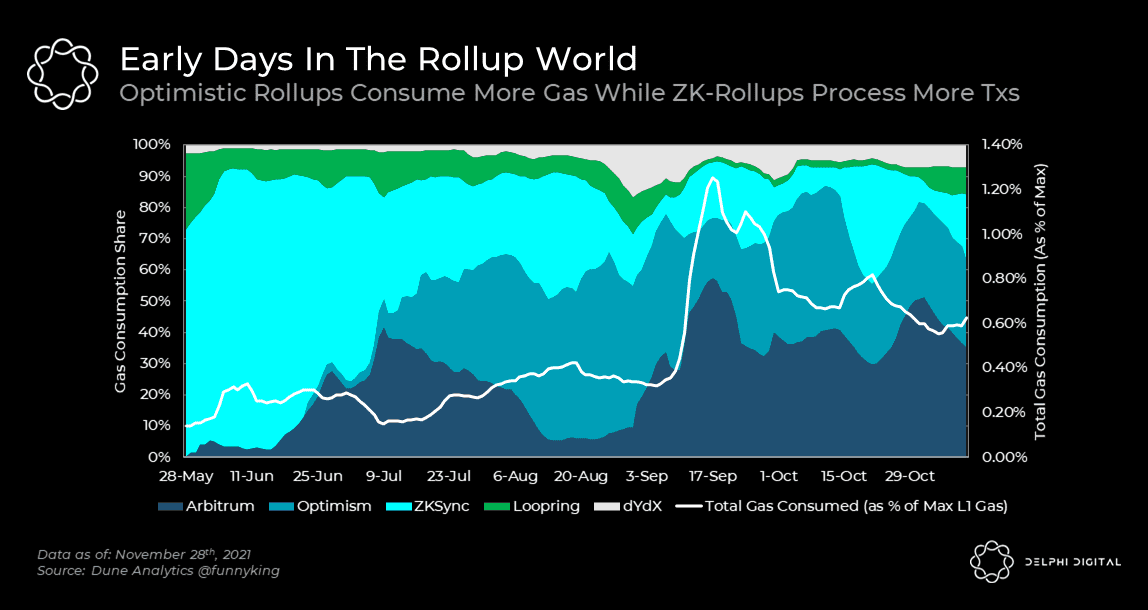

- ZK-Rollups can provide more scalability than Optimistic Rollups, as we have observed in practice. For instance, dYdX's throughput is about 10 times that of Optimism, while consuming only 1/5 of the L1 space.

On-chain activity is evolving at an extremely fast pace, accompanied by user demand for block space.

Essentially, this is a scalability war involving technical terms such as parachains, sidechains, cross-chain bridges, zones, sharding, rollups, and data availability (DA). In this article, we attempt to cut through this noise and elaborate on this scalability war. So, before you fasten your seatbelt, grab a cup of coffee or tea, as this will be a long journey.

Seeking Scalability

Since Vitalik proposed the famous scalability trilemma, there has been a misunderstanding in the crypto community that the trilemma is immutable, and everything must be a trade-off. While this is true in most cases, we occasionally see the boundaries of the trilemma being pushed (either through genuine innovation or by introducing additional but reasonable trust assumptions).

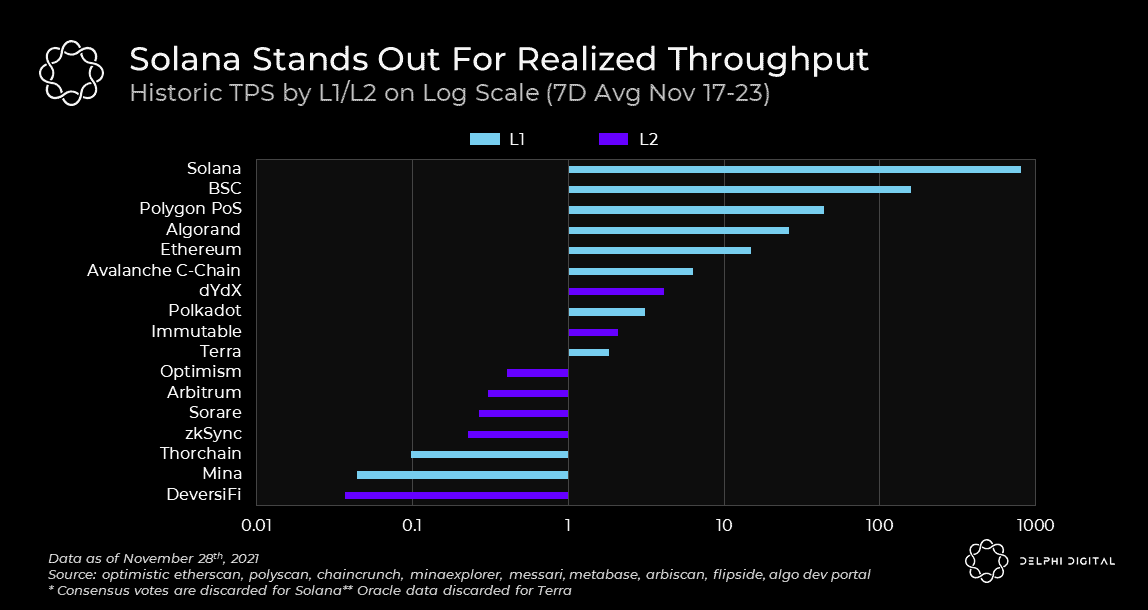

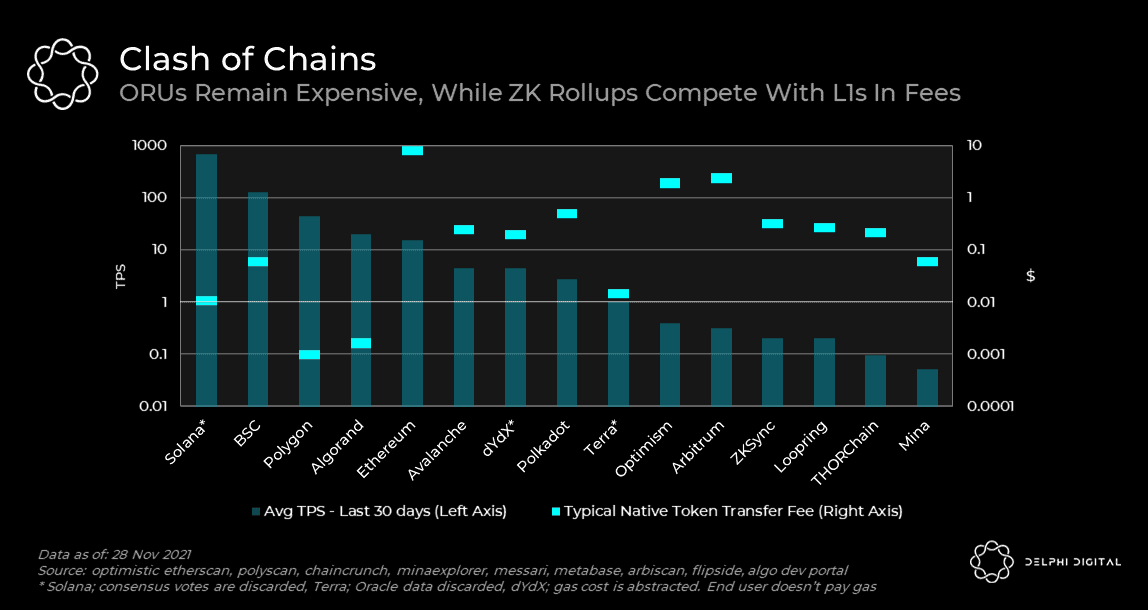

As we browse through different designs, we will highlight some examples. But before we begin, it is important to define what scalability means. In short, scalability is the ability to handle more transactions without increasing verification costs. With this in mind, let's take a look at the current TPS data of major blockchains. In this article, we will explain the design attributes that achieve these different throughput levels. Importantly, the numbers shown below are not the highest levels they can achieve but rather the actual values from the historical usage of these protocols.

Monolithic Chains vs. Modular Chains

Monolithic Blockchain

First, let's look at monolithic chains. In this camp, Polygon PoS and BSC do not meet our definition of scalability, as they merely increase throughput through larger blocks (it is well known that this trade-off increases the resource demands on nodes and sacrifices decentralization for performance). While this trade-off has its market fit, it is not a long-term solution and thus is not particularly noteworthy. Polygon recognizes this and is shifting towards a rollup-centric, more sustainable solution.

On the other hand, Solana is a serious attempt to push the boundaries of a fully composable monolithic blockchain. Solana's secret weapon is called Proof of History (PoH), which aims to create a global concept of time (a global clock) in which all transactions, including consensus votes, carry reliable timestamps attached by the sender. These timestamps allow nodes to make progress without waiting to synchronize with each other on every block. Solana also achieves better scalability by optimizing its execution environment to process transactions in parallel rather than handling one transaction at a time like the EVM.
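The idea of a verifiable clock can be illustrated with a sequential hash chain. The sketch below is a simplified illustration and not Solana's actual implementation; the function names (`tick`, `record`, `verify`) and the event payloads are hypothetical. Because each hash depends on the previous one, the position of an event in the chain acts as a timestamp that anyone can re-check.

```python
import hashlib


def tick(state: bytes, event: bytes = b"") -> bytes:
    """Advance the clock one step, optionally mixing an event into the hash."""
    return hashlib.sha256(state + event).digest()


def record(seed: bytes, events: dict[int, bytes], steps: int) -> list[bytes]:
    """Run `steps` sequential hashes, mixing in events[i] at step i."""
    state, history = seed, []
    for i in range(steps):
        state = tick(state, events.get(i, b""))
        history.append(state)
    return history


def verify(seed: bytes, events: dict[int, bytes], history: list[bytes]) -> bool:
    """Recompute the chain; moving an event changes every later hash."""
    return record(seed, events, len(history)) == history


# Hypothetical events placed at steps 3 and 7 of a 10-step chain.
events = {3: b"alice->bob", 7: b"vote:block42"}
history = record(b"genesis", events, 10)

print(verify(b"genesis", events, history))  # True
print(verify(b"genesis", {3: b"vote:block42", 7: b"alice->bob"}, history))  # False
```

Swapping the two events breaks verification, which is the property that lets nodes agree on ordering from the chain itself rather than from extra rounds of messaging.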

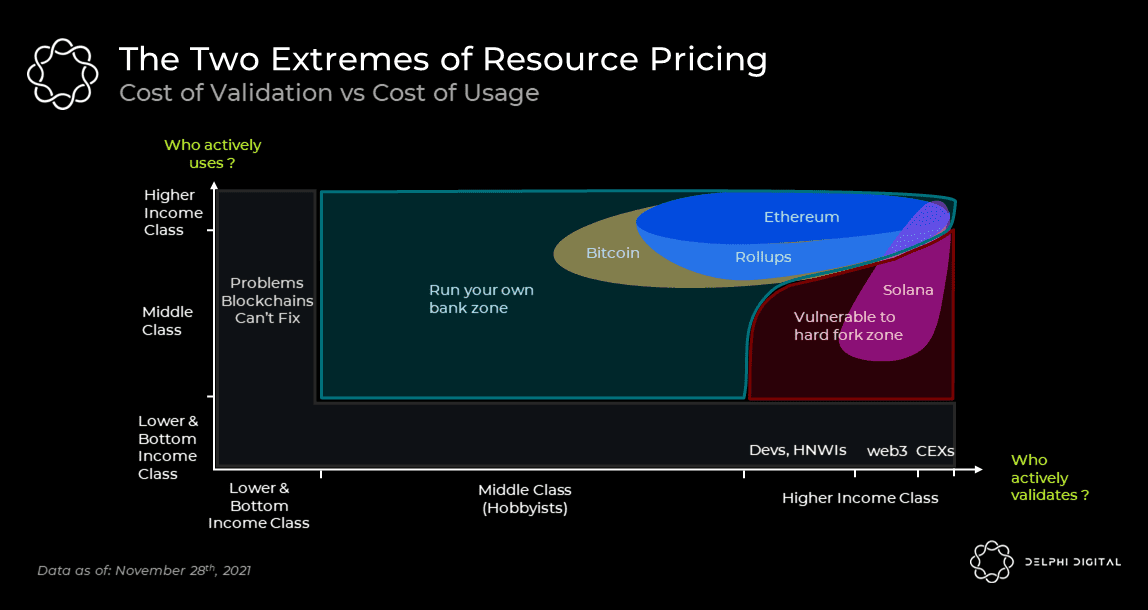

Although Solana achieves throughput gains, this is largely owed to more intensive use of hardware and network bandwidth. While this lowers user fees, it restricts node operation to a limited number of data centers. This contrasts with Ethereum, which, although out of reach for many users because of high fees, is ultimately governed by its active users, who can run nodes from home.

How Monolithic Blockchains Will Fail

The scalability of monolithic blockchains will ultimately be limited by the capacity of a single powerful node. Regardless of subjective views on decentralization, this capacity can only be pushed so far before governance is restricted to a relatively small number of actors. In contrast, modular chains split the total workload among different nodes, allowing for greater throughput than any single node can handle.

Crucially, decentralization is only half of the modular picture. Another motivation behind modularity, as important as decentralization, is effective resource pricing (i.e., fees). In a monolithic chain, all transactions compete for the same block space and consume the same resources. Therefore, when the chain is congested, excess demand for a single application adversely affects all applications on the chain, as everyone's fees rise. This issue has existed since CryptoKitties congested the Ethereum network in 2017. Importantly, additional throughput has never truly solved the problem but merely postponed it. The history of the internet tells us that every increase in capacity creates room for new, previously unviable applications that often quickly consume the newly added capacity.
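The difference between one shared fee market and several separate ones can be made concrete with a toy model. The pricing rule below (fee scales with demand once a pool is full), the application names, and all numbers are assumptions chosen for illustration; no real chain prices block space exactly this way.

```python
def clearing_fee(demand: float, capacity: float, floor: float = 1.0) -> float:
    """Toy pricing rule (an assumption): fee rises with demand once the pool is full."""
    return floor * max(1.0, demand / capacity)


def shared_pool(demands: dict[str, float], capacity: float) -> dict[str, float]:
    """Monolithic chain: every application pays the fee set by total demand."""
    fee = clearing_fee(sum(demands.values()), capacity)
    return {app: fee for app in demands}


def separate_pools(
    demands: dict[str, float], capacities: dict[str, float]
) -> dict[str, float]:
    """Modular design: each application's fee depends only on its own pool."""
    return {app: clearing_fee(d, capacities[app]) for app, d in demands.items()}


# Hypothetical demand spike from a single application.
demands = {"nft_mint": 900.0, "payments": 50.0, "dex": 50.0}

print(shared_pool(demands, capacity=300.0))
print(separate_pools(demands, {"nft_mint": 100.0, "payments": 100.0, "dex": 100.0}))
```

With the same total capacity, the payments application pays roughly 3.3 times the floor fee in the shared pool but only the floor fee in its own pool; the cost of the spike stays with the application that caused it.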

Ultimately, monolithic chains cannot self-optimize for applications with different priorities and vastly different needs. Take Solana as an example, where Kin and Serum DEX illustrate this point. Solana's low latency is suitable for applications like Serum DEX; however, maintaining such latency also requires limiting state growth, which is enforced by charging state rent for each account. This, in turn, adversely affects account-intensive applications like Kin, which cannot offer Solana's throughput to the masses due to high fees.

Looking ahead, it is naive to expect a single resource pool to reliably support a variety of crypto applications (from Metaverse, gaming to DeFi and payments). While increasing the throughput of fully composable chains is useful, we need a broader design space and better resource pricing for mainstream adoption. This is where the modular approach comes into play.

The Evolution of Blockchain

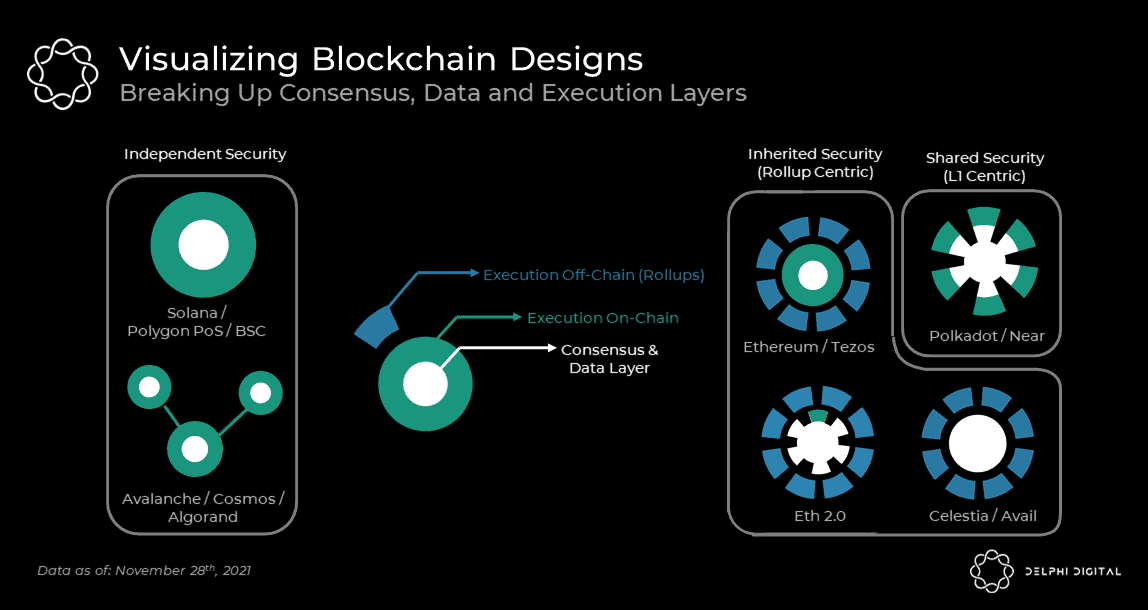

In the quest for scalability, we have witnessed a trend shift from "composability" to "modularity." First, we need to define these terms: composability refers to the ability of applications to interact seamlessly with minimal friction, while modularity is a tool for breaking a system down into multiple separate components (modules) that can be freely detached and reassembled.

Ethereum Rollups, ETH 2.0 sharding, Cosmos Zones, Polkadot parachains, Avalanche Subnets, Near's Chunks, and Algorand's sidechains can all be seen as modules. Each module handles a subset of the total workload within its respective ecosystem while maintaining the ability to communicate across modules. As we delve into these ecosystems, we will notice that modular designs vary significantly in how they achieve security across modules.

Multi-chain hubs like Avalanche, Cosmos, and Algorand are best suited for independent security modules, while Ethereum, Polkadot, Near, and Celestia (a relatively new L1 design) envision modules that ultimately share or inherit each other's security.

Multi-chain/Multi-network Hub

The simplest modular design is called an interoperability hub, which refers to multiple chains/networks communicating with each other through standard protocols. Hubs provide a broader design space, allowing them to customize application-specific blockchains at many different levels, including virtual machines (VMs), node requirements, fee models, and governance. The flexibility of application chains is unmatched by smart contracts on general-purpose chains, so let's briefly review some examples:

- Terra supports a decentralized stablecoin worth over $8 billion, with a unique fee and inflation model optimized for the adoption and stability of its stablecoin.

- The cross-chain DEX Osmosis, which currently handles the highest IBC throughput, encrypts transactions until they are finalized to prevent front-running.

- Algorand and Avalanche aim to host enterprise use cases on customized networks. From CBDCs operated by government agencies to gaming networks run by gaming company committees, these are all feasible. Importantly, the throughput of such networks can be increased with more powerful machines without affecting the level of decentralization of other networks/chains.

Hubs also provide scalability advantages as they can use resources more efficiently. For example, in Avalanche, the C-Chain is used for EVM-compatible smart contracts, while the X-Chain is used for P2P payments. Since payments can often be independent of each other (Bob's payment to Charlie does not depend on Alice's payment to Dana), the X-Chain can concurrently process certain transactions. By separating the VM from the core utility, Avalanche can handle more transactions.
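The observation that independent payments can be processed concurrently can be sketched as a simple scheduler. This is a minimal illustration under stated assumptions, not how Avalanche's X-Chain is implemented: the `Payment` type and `schedule` function are hypothetical, and conflicts are detected purely by shared account names.

```python
from typing import NamedTuple


class Payment(NamedTuple):
    sender: str
    receiver: str
    amount: int


def schedule(payments: list[Payment]) -> list[list[Payment]]:
    """Pack payments into batches whose account sets do not overlap.

    Payments inside one batch touch disjoint accounts, so they could run
    concurrently. A payment is always placed after the last batch that
    touches one of its accounts, which preserves the order of conflicts.
    """
    batches: list[list[Payment]] = []
    touched: list[set[str]] = []
    for p in payments:
        accounts = {p.sender, p.receiver}
        # Index of the last batch that conflicts with this payment (-1 if none).
        last = max((i for i, t in enumerate(touched) if t & accounts), default=-1)
        slot = last + 1
        if slot == len(batches):
            batches.append([])
            touched.append(set())
        batches[slot].append(p)
        touched[slot] |= accounts
    return batches


payments = [
    Payment("bob", "charlie", 5),
    Payment("alice", "dana", 3),     # independent of Bob's payment
    Payment("charlie", "alice", 2),  # depends on both earlier payments
]
for i, batch in enumerate(schedule(payments)):
    print(i, batch)
```

Here the first two payments land in the same batch, while the third has to wait for both because it touches Charlie's and Alice's accounts.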

These ecosystems can also achieve vertical scaling through fundamental innovations. Avalanche and Algorand stand out here, as they achieve better scalability by reducing the communication overhead of consensus. Avalanche does this through a "sub-sampled voting" process, while Algorand uses inexpensive VRFs (verifiable random functions) to randomly select a unique committee to reach consensus on each block.

Above, we have outlined the advantages of the hub approach. However, this approach also runs into some key limitations. The most obvious is that blockchains need to bootstrap their own security, as they cannot share or inherit each other's security. It is well known that any secure cross-chain communication requires either a trusted third party or synchrony assumptions. In the case of the hub approach, the trusted third party is the majority of the counterparty chain's validators.

For example, tokens bridged from one chain to another via IBC can always be redeemed (stolen) by a malicious majority of validators on the source chain. In today's scenario, where only a few chains coexist, this majority trust assumption may work well; however, in a future where there may be a long tail of chains/networks, expecting these chains/networks to trust each other's validators for communication or shared liquidity is far from ideal. This brings us to rollups and sharding, which provide cross-chain communication with guarantees stronger than the majority trust assumption.

(While Cosmos will introduce shared staking across Zones, and Avalanche allows multiple chains to be validated by the same network, these solutions scale less well because they place higher demands on validators. In practice, they are likely to be adopted by the most active chains rather than the long tail of chains.)

Data Availability (DA)

After years of research, it is widely believed that all efforts to share security boil down to a very subtle issue known as data availability (DA). To understand the reasons behind this, we need a quick overview of how nodes operate in a typical blockchain.

In a typical blockchain (Ethereum), full nodes download and validate all transactions, while light nodes only check block headers (block digests committed to by the majority of validators). Therefore, while full nodes can independently detect and reject invalid transactions (e.g., infinite minting of tokens), light nodes treat anything the majority has committed to as valid.

To improve this, ideally, any single full node could protect all light nodes by publishing small proofs. In such a design, light nodes could operate with similar security guarantees as full nodes without expending as many resources. However, this introduces a new issue known as data availability (DA).

If a malicious validator publishes a block header but withholds some or all of the transactions in the block, full nodes cannot determine whether the block is valid, as the missing transactions could be invalid or lead to double spending. Without this knowledge, full nodes cannot generate invalidity (fraud) proofs to protect light nodes. In summary, for the protection mechanism to work, light nodes must be assured that validators have made the complete list of transactions available.

The DA problem is an integral part of any modular design that aims for cross-chain communication beyond the majority trust assumption. Among L2s, rollups are special in that they do not sidestep this issue.

Rollup

In the rollup setting, we can view the main chain (Ethereum) as a light node of the rollup (Arbitrum). Rollups publish all their transaction data on L1 so that any L1 node willing to commit the resources can execute the transactions and rebuild the rollup state from scratch. With the complete state, anyone can transition the rollup to a new state and prove the validity of the transition by publishing validity or fraud proofs. Having the data available on the main chain allows rollups to operate under a minimal single-honest-node assumption rather than an honest-majority assumption.
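The single-honest-node assumption can be illustrated with a toy re-execution check. This is a sketch under simplifying assumptions: balances stand in for the rollup state, a hash of the serialized state stands in for a Merkle root, and `is_fraudulent` is a hypothetical stand-in for a real fraud proof, which would be verified on L1 rather than by direct comparison.

```python
import hashlib
import json

Transfer = tuple[str, str, int]  # (sender, receiver, amount)


def state_root(balances: dict[str, int]) -> str:
    """Stand-in for a Merkle root: hash of the canonically serialized state."""
    return hashlib.sha256(json.dumps(balances, sort_keys=True).encode()).hexdigest()


def apply_batch(balances: dict[str, int], batch: list[Transfer]) -> dict[str, int]:
    """Re-execute a batch of transfers, skipping any that would overdraw."""
    new = dict(balances)
    for sender, receiver, amount in batch:
        if new.get(sender, 0) >= amount:
            new[sender] = new.get(sender, 0) - amount
            new[receiver] = new.get(receiver, 0) + amount
    return new


def is_fraudulent(prev: dict[str, int], batch: list[Transfer], claimed: str) -> bool:
    """Any node holding the published data can check the operator's claim."""
    return state_root(apply_batch(prev, batch)) != claimed


genesis = {"alice": 10, "bob": 0}
batch = [("alice", "bob", 4)]  # transaction data published on L1

honest = state_root({"alice": 6, "bob": 4})
forged = state_root({"alice": 6, "bob": 4000})  # operator mints out of thin air

print(is_fraudulent(genesis, batch, honest))  # False
print(is_fraudulent(genesis, batch, forged))  # True
```

The check only works because `batch` is available to the verifier; if the operator withheld it, nobody could recompute the state, which is why the data has to be published.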

Consider the following to understand how rollups achieve better scalability through this design:

- Since any single node with the current rollup state can protect all other nodes without that state, the centralization risk of rollup nodes is lower, allowing rollup blocks to be reasonably larger.

- Even if all L1 nodes download the rollup data related to their transactions, only a small subset of nodes executes these transactions and builds the rollup state, thereby reducing overall resource consumption.

- Rollup data is compressed using clever techniques before being published to L1.

- Similar to application chains, rollups can customize their VMs for specific use cases, meaning more efficient use of resources.

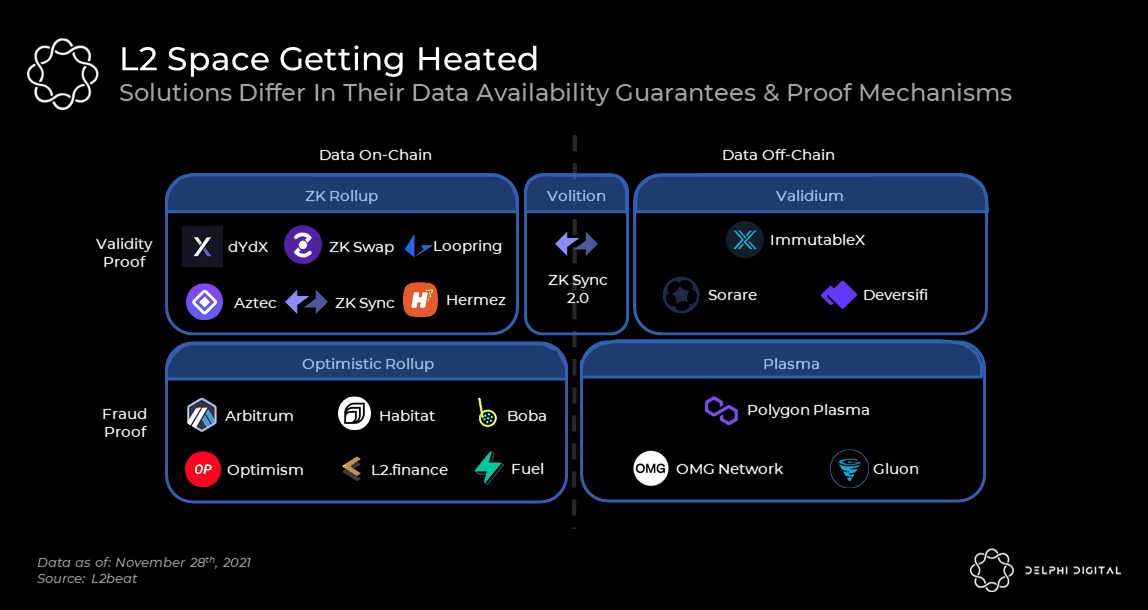

As of now, there are two main types of rollups: Optimistic rollups and ZK-rollups. From a scalability perspective, ZK-rollups have an advantage over Optimistic rollups because they compress data more efficiently, resulting in lower L1 space usage in certain use cases. This nuance has already been observed in practice. Optimism publishes data to L1 to reflect each transaction, while dYdX publishes data to reflect each account balance. As a result, dYdX's L1 space usage is 1/5 that of Optimism, and it is estimated to handle about 10 times the throughput. This advantage naturally translates into lower fees for ZK-rollup-based L2s.
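The difference between publishing per-transaction data and publishing per-account balances can be sketched with a rough byte count. The byte sizes and the workload below are hypothetical and chosen only to show the shape of the effect; the resulting ratio is an artifact of this toy workload and is unrelated to the 1/5 figure reported above.

```python
Transfer = tuple[str, str, int]  # (sender, receiver, amount)


def per_transaction_bytes(txs: list[Transfer], bytes_per_tx: int = 12) -> int:
    """Data published when every transaction is posted (size is an assumption)."""
    return len(txs) * bytes_per_tx


def state_diff_bytes(txs: list[Transfer], bytes_per_account: int = 12) -> int:
    """Data published when only the final balance of each touched account is posted."""
    touched = {acct for sender, receiver, _ in txs for acct in (sender, receiver)}
    return len(touched) * bytes_per_account


# Hypothetical workload: 10 active accounts trading 1,000 times in one batch.
txs = [(f"acct{i % 10}", f"acct{(i + 1) % 10}", 1) for i in range(1000)]

print(per_transaction_bytes(txs))  # grows with the number of transactions
print(state_diff_bytes(txs))       # grows with the number of touched accounts
```

The more often the same accounts transact within a batch, the larger the saving, which is consistent with the advantage appearing "in certain use cases" such as active trading.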

Unlike the fraud proofs used by Optimistic rollups, the validity proofs of ZK-rollups also support a new scalability solution called volition. Although the full impact of volition remains to be seen, it seems very promising, as it allows users to freely decide whether to publish data on-chain or off-chain. This enables users to choose a security level based on the type of their transactions. zkSync and Starkware will both launch volition solutions in the coming weeks/months.

Despite rollups applying clever techniques to compress data, all data still must be published to all L1 nodes. Therefore, rollups can only provide linear scalability benefits and will be limited in reducing fees; they are also highly affected by fluctuations in Ethereum gas prices. For sustainable scaling, Ethereum needs to expand its data capacity, which explains the necessity of Ethereum sharding.

Sharding and Data Availability (DA) Proofs

Sharding further relaxes the requirement for all main chain nodes to download all data, instead utilizing a new primitive called DA proofs to achieve higher scalability. With DA proofs, each node only needs to download a small portion of the shard chain data, knowing that these small portions can collectively reconstruct all shard chain blocks. This achieves shared security across shards, as it ensures that any single shard chain node can raise disputes, which are resolved by all nodes as needed. Polkadot and Near have already implemented DA proofs in their sharding designs, and ETH 2.0 will adopt them as well.
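Why a small number of samples can be enough is a matter of simple probability. The sketch below assumes that, thanks to erasure coding, a block becomes unrecoverable only if at least some fraction of its chunks is withheld (the 50% used here is an illustrative assumption, and the real threshold depends on the coding scheme), and that each node samples chunks uniformly and independently.

```python
import math


def miss_probability(withheld_fraction: float, samples: int) -> float:
    """Chance that all of a node's uniform samples land on available chunks."""
    return (1.0 - withheld_fraction) ** samples


def samples_needed(withheld_fraction: float, target_miss: float) -> int:
    """Smallest sample count with miss probability at or below target_miss."""
    return math.ceil(math.log(target_miss) / math.log(1.0 - withheld_fraction))


# Assumption: the adversary must withhold at least half of the chunks.
print(miss_probability(0.5, 20))
print(samples_needed(0.5, 1e-9))  # 30
```

Under these assumptions, a node that downloads only 30 chunks has less than a one-in-a-billion chance of being fooled into accepting an unrecoverable block, regardless of how large the block is.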

At this point, it is worth mentioning how ETH 2.0's sharding roadmap differs from others. While Ethereum's initial roadmap was similar to Polkadot's, it has recently shifted towards sharding data only. In other words, shards on Ethereum will serve as the DA layer for rollups. This means Ethereum will continue to maintain a single state, as it does today. In contrast, Polkadot performs all execution on its base layer, with a distinct state for each shard.

One major advantage of treating sharding as a pure data layer is that rollups can flexibly dump data across multiple shards while remaining fully composable. Thus, the throughput and fees of rollups are not constrained by the data capacity of a single shard. With 64 shards, the maximum total throughput of rollups is expected to increase from 5K TPS to 100K TPS. In contrast, regardless of how much throughput Polkadot generates overall, fees will be constrained by the limited throughput of individual parachains (1000-1500 TPS).

Dedicated DA Layers

Dedicated DA layers are the latest form of modular blockchain design. They utilize the fundamental ideas of ETH 2.0's DA layers but steer them in a different direction. A pioneering project in this area is Celestia, but newer solutions like Polygon Avail are also moving in this direction.

Similar to ETH 2.0's DA sharding, Celestia acts as a base layer where other chains (rollups) can plug in to inherit security. Celestia's solution differs from Ethereum in two fundamental ways:

- It does not perform any meaningful state execution at the base layer (whereas ETH 2.0 does). This frees rollups from highly unreliable base layer fees, which can spike due to token sales, NFT airdrops, or high-yield farming opportunities. Rollups consume a single resource (i.e., bytes in the base layer), and they consume it solely for security. This efficiency allows rollup fees to be tied primarily to usage of the specific rollup rather than usage of the base layer.

- Due to DA proofs, Celestia can increase its DA throughput without sharding. A key feature of DA proofs is that as more nodes participate in sampling, more data can be stored. In the case of Celestia, this means that as more light nodes participate in DA sampling (without centralization), blocks can become larger (higher throughput).
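The relationship between the number of sampling nodes and block size in the second point can be sketched with a back-of-the-envelope estimate. The model below assumes every light node draws the same number of uniform random samples with replacement; the chunk counts, node counts, and samples per node are hypothetical and are not Celestia's parameters.

```python
def expected_coverage(total_chunks: int, nodes: int, samples_per_node: int) -> float:
    """Expected fraction of distinct chunks hit by all nodes' uniform samples."""
    draws = nodes * samples_per_node
    return 1.0 - (1.0 - 1.0 / total_chunks) ** draws


# Hypothetical block sizes (in chunks) and light-node counts, 20 samples each.
for chunks in (1_000, 4_000, 16_000):
    for nodes in (50, 200, 800):
        print(chunks, nodes, round(expected_coverage(chunks, nodes, 20), 3))
```

In this model, a block four times larger needs about four times as many sampling nodes to keep the same collective coverage, which is the sense in which more light nodes allow larger blocks.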

Like all designs, dedicated DA layers also have some drawbacks. A direct drawback is the lack of a default settlement layer. Therefore, for rollups to share assets with each other, they must implement methods to interpret each other's fraud proofs.

Conclusion

We have evaluated different blockchain designs, including monolithic chains, multi-chain hubs, rollups, sharded chains, and dedicated DA layers. Given the relatively simple infrastructure of multi-chain hubs, broader design space, and horizontal scaling capabilities, we believe multi-chain hubs are best suited to address the urgent needs of the blockchain industry. In the long run, considering resource efficiency and unique scalability, dedicated DA layers are likely to become the ultimate solution for scalability.